This last weekend it was reported that over 7 million Venmo transactions had been openly available and were scraped by a computer science student, who put them on GitHub. This wasn’t the first time: last year another data scientist scraped over 207 million.

Why was this possible?

The transactions were available via the Venmo Developer API, which changed very little between these two events. How’s that for privacy?

How is this OK? On the one hand, you could argue that a few interesting projects have resulted, such as a Twitter bot that listens for drug transactions. On the other hand, there is no reason that an API with such sensitive information should be public.

Feeling Helpless

Although I don’t think (or at least I hope not) that any specific individual can be terribly hurt by this, it’s wrong. It’s a violation of privacy. I have not used this service myself, but I know many people who have. What can I do? I wanted to be empowered to know if anyone I cared about had their information compromised. If they had, I wanted to tell them so that they could take whatever action might be possible and necessary, even if that only meant making their account private or no longer using the service. Knowledge is power. At the time I had never really used MongoDB, so I didn’t know what I was doing. As with most things, I jumped in and had faith that I’d figure it out. So this is what we are going to do today.

- Using a Singularity container for MongoDB

- On a high performance computing cluster

- We will learn how to work with this data

- Because knowledge is power.

If you are interested in MongoDB and containers but don’t care about this particular Venmo dataset, check out the new repository of examples I’ve created for singularity-compose (the example called mongodb-pull also includes the Venmo instructions). Otherwise, read on!

Feeling Empowered

First, let’s grab an interactive node on a cluster. We will ask for more time and memory than we need.

$ srun --mem 64000 --time 24:00:00 --pty bash

Create a folder on scratch to work in

$ mkdir -p $SCRATCH/venmo

$ cd $SCRATCH/venmo

The data comes from this repository. You have to download it, and yes, it takes a while.

$ wget https://d.badtech.xyz/venmo.tar.xz

Next, extract it.

$ tar xf venmo.tar.xz

Pull the Singularity container for MongoDB.

$ singularity pull docker://mongo

Create subfolders where MongoDB can write its data on the host. This is very important, because it means that you can stop and restart the instance without losing any of the data. You’ll only need to import it once.

$ mkdir -p data/db data/configdb

Enter the container

Shell into the container, binding data to /data. The container image itself is read-only, so this bind is what lets mongod write its database (by default to /data/db) into the data folder on the host.

$ singularity shell --bind data:/data mongo_latest.sif

Start the mongo daemon as a background process:

$ mongod &

Change directory into the “dump/test” folder from the extracted archive (venmo.bson is here):

$ cd dump/test

Then restore the data:

$ mongorestore --collection venmo --db test venmo.bson

Query the data

Next, connect to a shell. It’s time to query!

$ mongo

>

Display the database we are using

> db

test

Get the collection

> venmo = db.getCollection('venmo')

test.venmo

Confirm that we have all the records

> venmo.count()

7076585

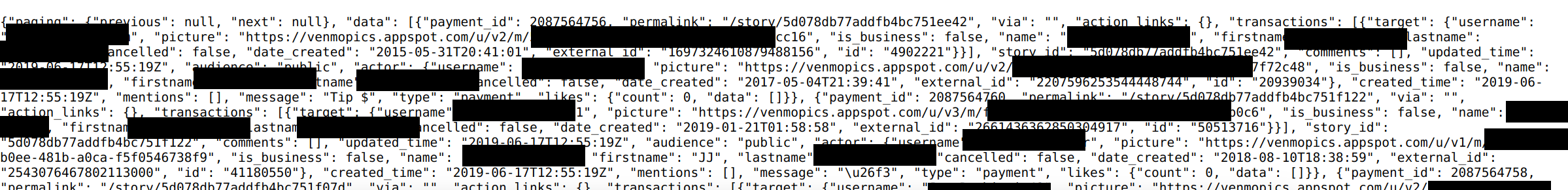

You can use “findOne” to see all the fields provided for each datum (shortened to hide information):

> venmo.findOne()

{
    "_id" : ObjectId("5bb7bdce1bed297da9fcb11f"),
    "mentions" : {
        "count" : 0,
        "data" : [ ]
    },
    ...
    "note" : "🍺",
    "app" : {
        "site_url" : null,
        "id" : 1,
        "description" : "Venmo for iPhone",
        "image_url" : "https://venmo.s3.amazonaws.com/oauth/no-image-100x100.png",
        "name" : "Venmo for iPhone"
    },
    "date_updated" : ISODate("2018-07-26T18:47:05Z"),
    "transfer" : null
}

>

Now for the interesting part. Looking at the structure above, I can see that I’d most likely want to find someone by name. Here is how you could do that.

> venmo.find({"payment.actor.last_name": "Roberts"})
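The query above uses MongoDB’s dot notation, where "payment.actor.last_name" reaches into nested sub-documents. To check a whole list of people on both sides of a payment from the mongo shell, an $or over the two fields works, for example venmo.find({$or: [{"payment.actor.last_name": "Roberts"}, {"payment.target.last_name": "Roberts"}]}). To make the dotted lookup concrete, here is a minimal Python sketch over documents that have already been loaded as plain dicts. It assumes only the two field paths used in this post’s queries; get_path and involves are hypothetical helper names, and unlike the mongo query the matching here is case-insensitive.

```python
from typing import Any, Iterable, Iterator

# Hypothetical helpers. The two dotted paths are the ones used in the
# queries in this post; no other fields of the export are assumed.
NAME_PATHS = ("payment.actor.last_name", "payment.target.last_name")


def get_path(doc: dict, path: str) -> Any:
    """Follow a dotted path through nested dicts, like MongoDB dot notation.

    Returns None when a step is missing or is not a dict. Unlike MongoDB,
    this does not reach into arrays.
    """
    value: Any = doc
    for key in path.split("."):
        if not isinstance(value, dict):
            return None
        value = value.get(key)
    return value


def involves(docs: Iterable[dict], last_names: Iterable[str]) -> Iterator[dict]:
    """Yield transactions where either side has one of the given last names.

    Matching is case-insensitive here; the mongo shell query is an exact,
    case-sensitive match.
    """
    wanted = {name.lower() for name in last_names}
    for doc in docs:
        for path in NAME_PATHS:
            name = get_path(doc, path)
            if isinstance(name, str) and name.lower() in wanted:
                yield doc
                break  # report each transaction once, even if both sides match


if __name__ == "__main__":
    # Made-up sample documents, not taken from the dataset.
    sample = [
        {"payment": {"actor": {"last_name": "Roberts"}, "target": {"last_name": "Lee"}}},
        {"payment": {"actor": {"last_name": "Nguyen"}, "target": {"last_name": "Smith"}}},
        {"payment": None},
    ]
    for doc in involves(sample, ["roberts", "smith"]):
        print(doc["payment"]["actor"]["last_name"])  # Roberts, then Nguyen
```

Passing in the last names of everyone you care about gives one pass over the data instead of one query per person.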

The collection is large, and without an index on the field being queried MongoDB has to scan every document, so a query can take a few minutes. I’m not an expert with MongoDB, so I’m sure there are ways to index (for example, createIndex on the fields you search) and otherwise optimize queries that I haven’t learned yet. I did figure out that it’s much easier to look at the data (in an editor like vim) once it’s exported to JSON, and you can do that from the command line as follows:

$ mongo test --eval "db.getCollection('venmo').find({'payment.target.last_name': 'Smith'}).toArray()" > smith.json

If you want the shell to print more documents per batch (the default is 20), set DBQuery.shellBatchSize at the front of the command:

$ mongo test --eval "DBQuery.shellBatchSize = 2000; ...
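One caveat: the mongo shell prints documents in its own notation, with wrappers such as ObjectId(...) and ISODate(...) as in the findOne output above, and its output may also start with banner lines, so the file is great for reading in vim but is not strict JSON. If you want to process an export in a script, the mongoexport tool (part of the same MongoDB database tools as mongorestore) writes one Extended JSON document per line, for example mongoexport --db test --collection venmo --query '{"payment.target.last_name": "Smith"}' --out smith.json. Below is a minimal Python sketch for reading that kind of file. It assumes ObjectIds appear as {"$oid": ...} and dates as ISO-8601 strings under {"$date": ...}, which depends on the mongoexport version and output mode; read_export and _unwrap are hypothetical names, and any other Extended JSON forms are left untouched.

```python
import json
from datetime import datetime
from typing import Any, Iterable, Iterator


def _unwrap(obj: dict) -> Any:
    """json object_hook: replace simple Extended JSON wrappers with Python values.

    Handles {"$oid": ...} and string-valued {"$date": ...}; anything else
    (e.g. canonical {"$date": {"$numberLong": ...}}) is returned unchanged.
    """
    if len(obj) == 1:
        if "$oid" in obj:
            return obj["$oid"]  # keep the ObjectId as its hex string
        if "$date" in obj and isinstance(obj["$date"], str):
            stamp = obj["$date"]
            # fromisoformat before Python 3.11 rejects a trailing "Z",
            # so spell the UTC offset out explicitly.
            if stamp.endswith("Z"):
                stamp = stamp[:-1] + "+00:00"
            return datetime.fromisoformat(stamp)
    return obj


def read_export(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one document per non-blank line of line-delimited JSON."""
    for line in lines:
        line = line.strip()
        if line:
            yield json.loads(line, object_hook=_unwrap)
```

Because read_export takes any iterable of lines, an open file works directly, e.g. list(read_export(open("smith.json"))), and documents are decoded one at a time rather than all at once.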

Another fun query is to search the “notes,” which likely describe what the payments were for.

> venmo.find({"note": "pancakes"}).count()

50
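Keep in mind that {"note": "pancakes"} is an exact match on the entire note, so notes like "Pancakes!" or "pancakes and coffee" are not part of that 50. In the mongo shell, a case-insensitive regular expression such as venmo.find({"note": /pancake/i}).count() would include them, and the $sortByCount aggregation stage can tally the most common notes on the server. To illustrate the difference, here is a small Python sketch over documents already loaded as dicts (for instance, parsed from a JSON export); the function names are hypothetical, and the sample notes are made up rather than taken from the dataset.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple


def count_exact(docs: Iterable[dict], note: str) -> int:
    """Roughly like {"note": note}: the whole note must equal the string."""
    return sum(1 for doc in docs if doc.get("note") == note)


def count_matching(docs: Iterable[dict], pattern: str) -> int:
    """Roughly like {"note": /pattern/i}: case-insensitive match anywhere in the note."""
    regex = re.compile(pattern, re.IGNORECASE)
    return sum(
        1 for doc in docs
        if isinstance(doc.get("note"), str) and regex.search(doc["note"])
    )


def top_notes(docs: Iterable[dict], n: int = 10) -> List[Tuple[str, int]]:
    """The n most common notes with their counts, most frequent first."""
    notes = (doc["note"] for doc in docs if isinstance(doc.get("note"), str))
    return Counter(notes).most_common(n)


if __name__ == "__main__":
    # Made-up sample notes, not taken from the dataset.
    sample = [{"note": "pancakes"}, {"note": "Pancakes!"}, {"note": "pancakes"},
              {"note": "rent"}, {}]
    print(count_exact(sample, "pancakes"))    # 2
    print(count_matching(sample, "pancake"))  # 3
    print(top_notes(sample, 1))               # [('pancakes', 2)]
```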

Wow! The good news is that although I did find names that were familiar, there wasn’t enough detail in any of the metadata to make me overly concerned. The notes for the transactions were actually quite funny, anything from silly spellings of a word, to an emoticon, to a heartfelt message about missing or caring for someone.

Summary

This data exploration gave me confidence that, although it sucks, the exports aren’t so terrible as to cause huge harm to anyone’s life. It is still hugely wrong on the part of the service, and (if I used it) I wouldn’t continue to do so. I am tickled that 50 transactions had the note “pancakes.” There is a silver lining in all things, it seems.

Suggested Citation:

Sochat, Vanessa. "Exploring MongoDB on HPC." @vsoch (blog), 22 Jun 2019, https://vsoch.github.io/2019/exploring-mongo/ (accessed 03 Jan 26).