-

Visualizations, Contain yourselves!

Visualizing things is really challenging. The reason is because it’s relatively easy to make a visualization that is too complex for what it’s trying to show, and it’s much harder to make a visualization catered for a specific analysis problem. Simplicity is usually the best strategy, but while standard plots (e.g., scatter, box and whisker, histogram) are probably ideal for publications, they aren’t particularly fun to think about. You also have the limitations of your medium and where you paint the picture. For example, a standard web browser will get slow when you try to render ~3000 points with D3. In these cases you are either trying to render too many, or you need a different strategy (e.g., render points on canvas in favor of number over interactivity).

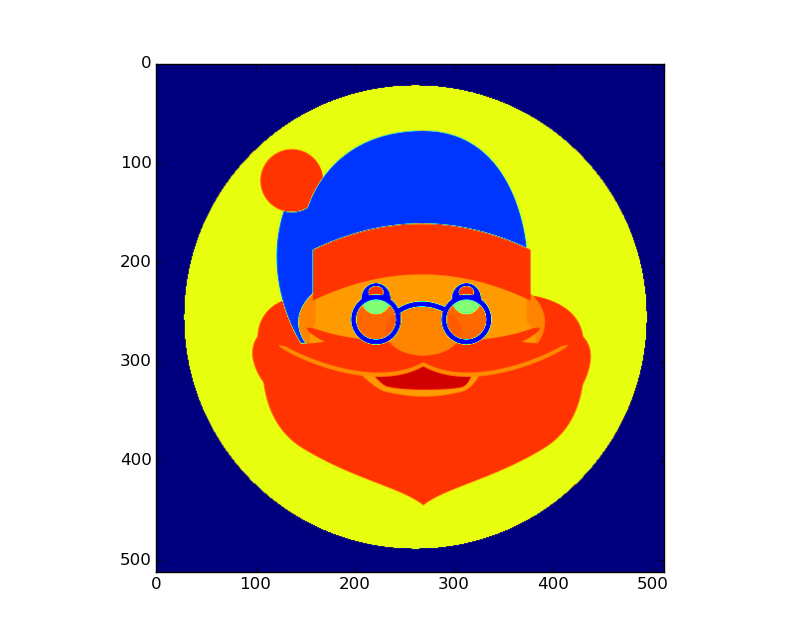

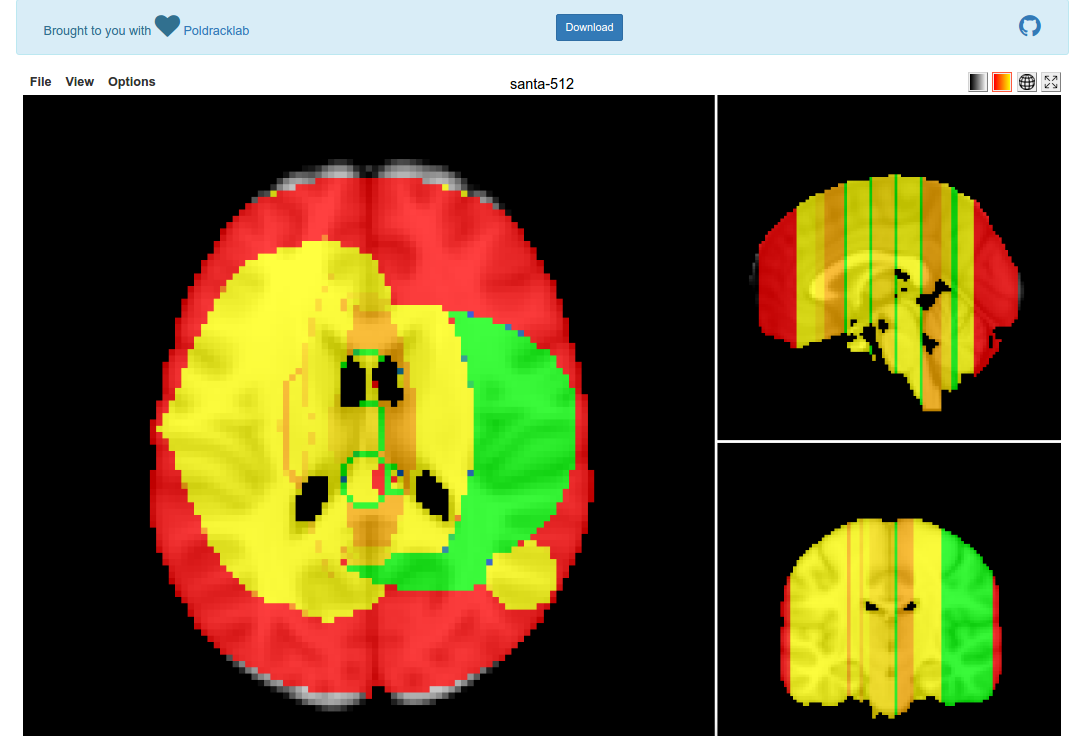

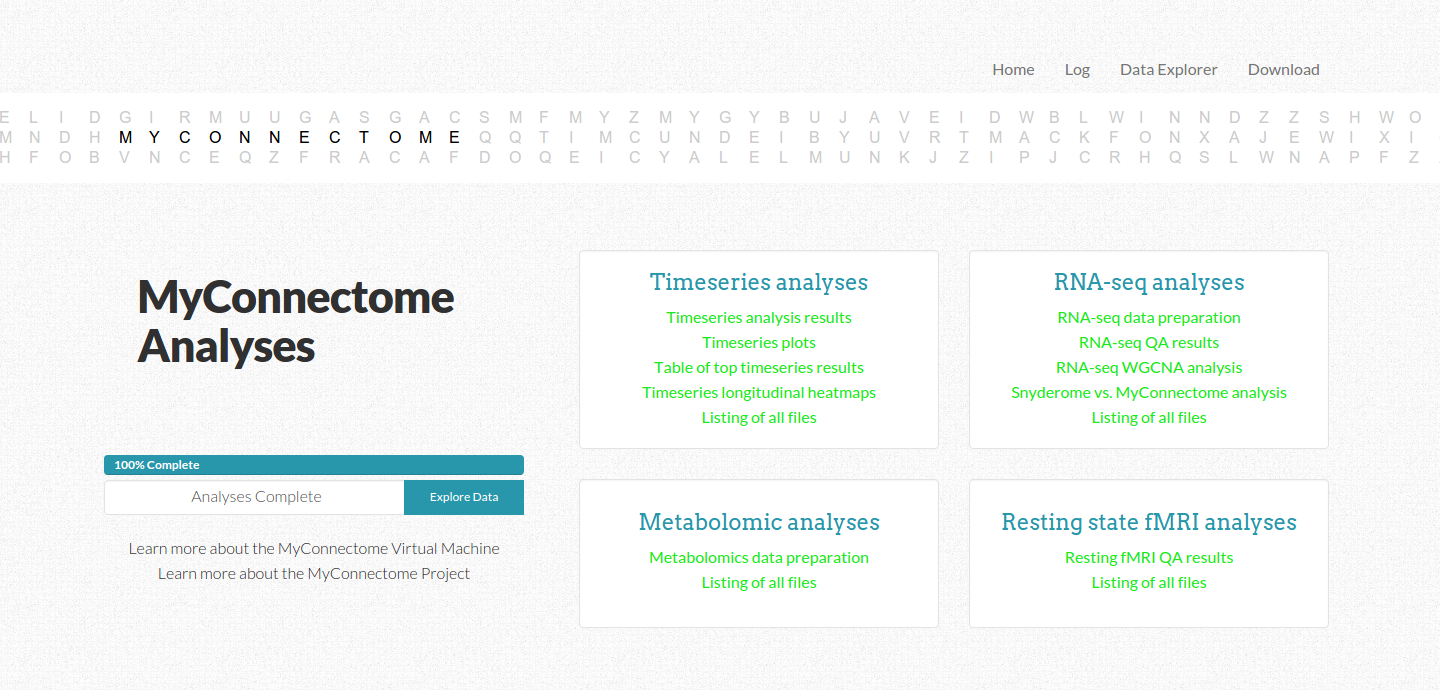

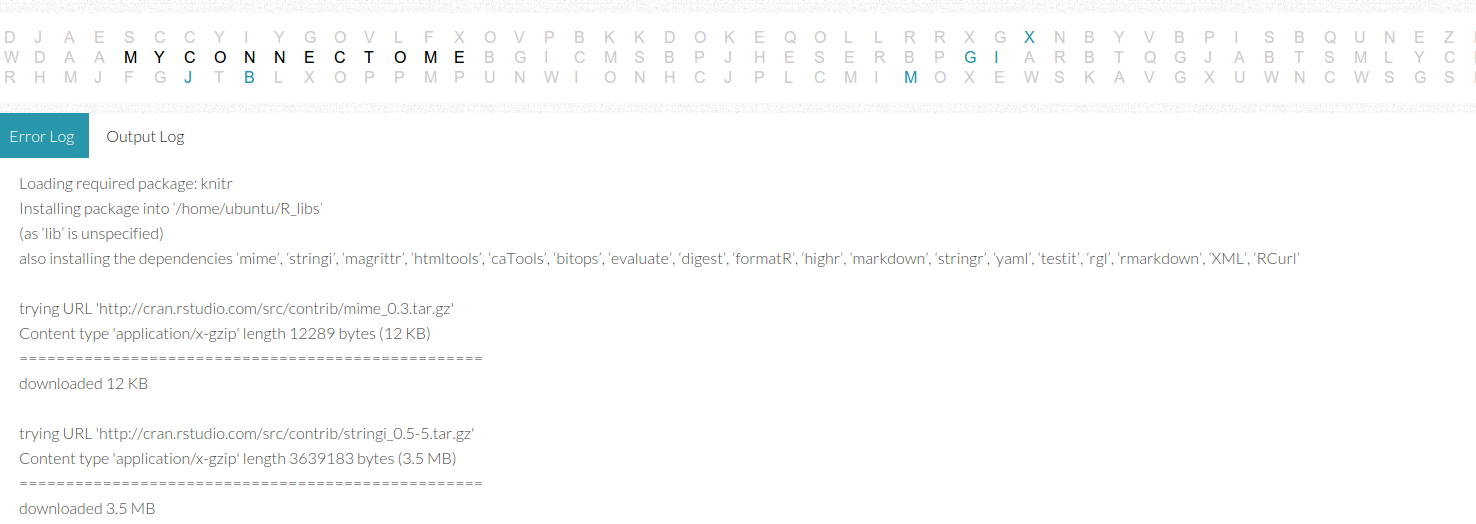

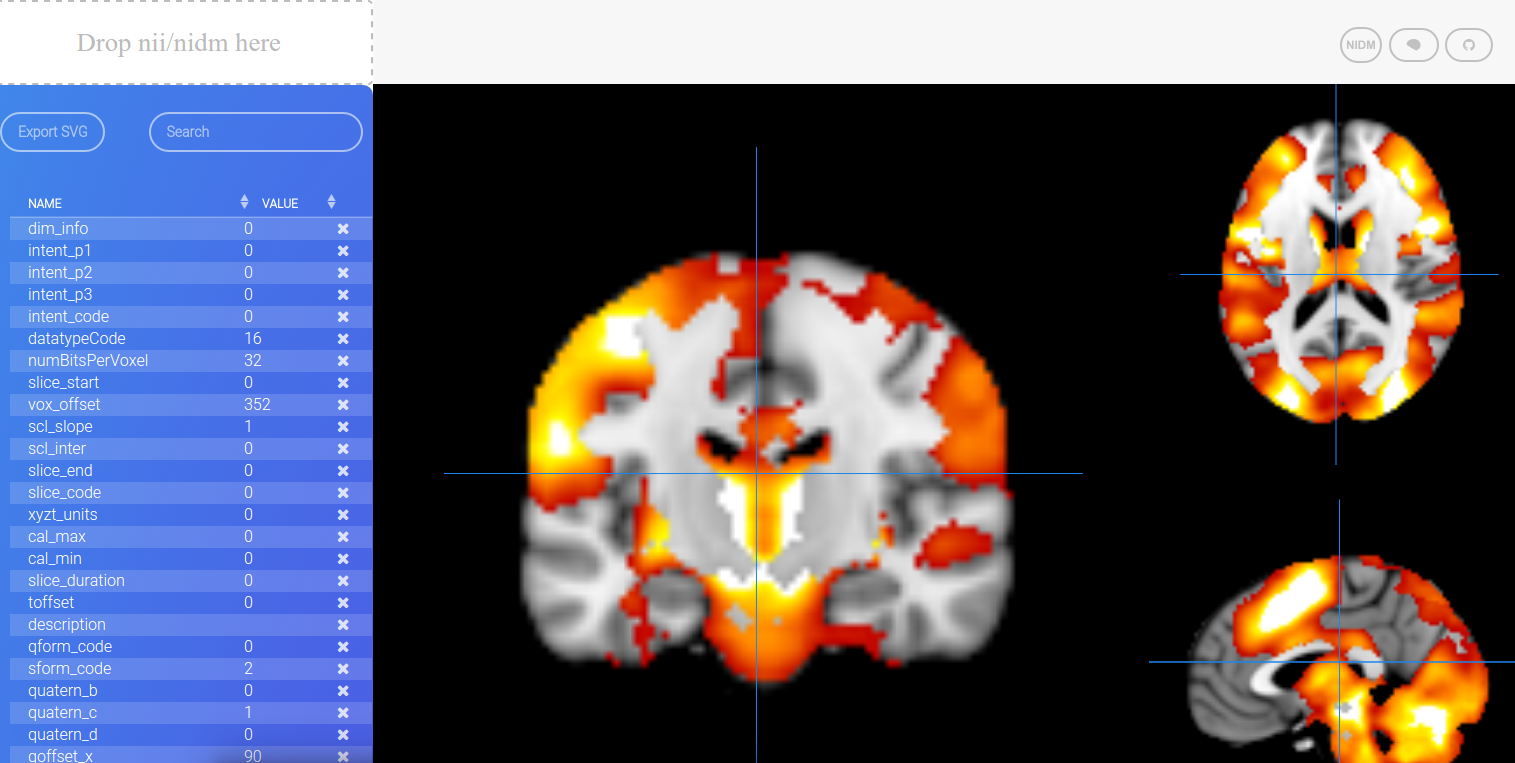

I recently embarked on a challenge to visualize a model defined at every voxel in the brain (a voxel is a little 3D cube of brain landscape associated with an X,Y,Z coordinate). Why would I want to do this? I won’t go into details here, but with such models you could predict what a statistical brain map might look like based on cognitive concepts, or predict a set of cognitive concepts from a brain map. This work is still being prepared for publication, but we needed a visualization because the diabolical Poldrack is giving a talk soon, and it would be nice to have some way to show output of the models we had been working on. TLDR: I made a few Flask applications and shoved them into Docker continers with all necessary data, and this post will review my thinking and design process. The visualizations are in no way “done” (whatever that means) because there are details and fixes remaining.

Step 1: How to cut the data

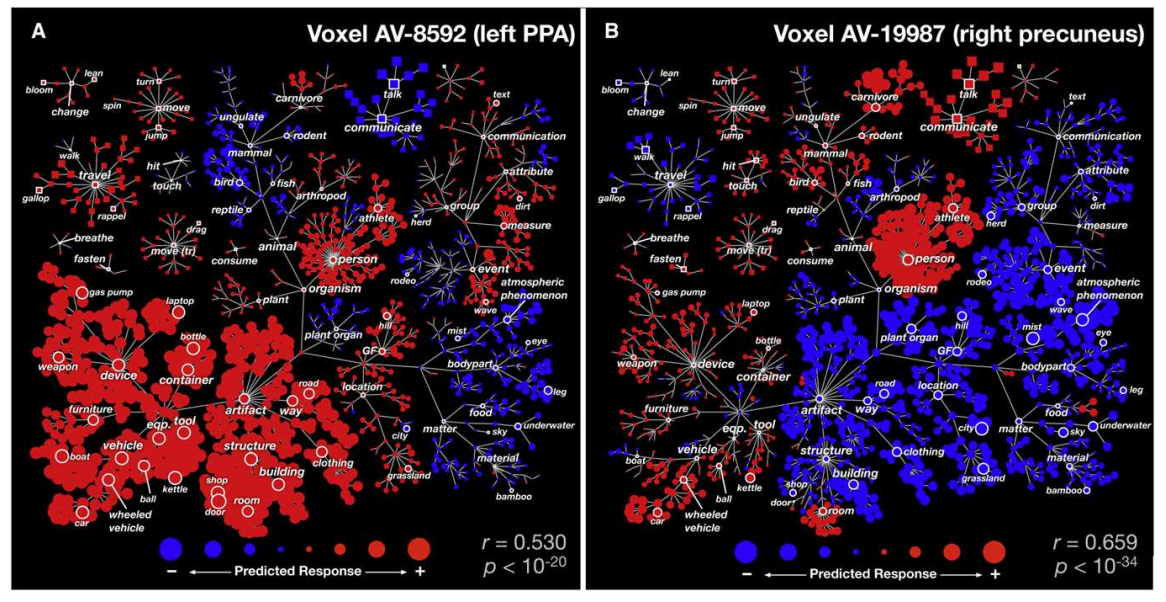

We have over 28K models, each built from a set of ~100 statistical brain maps (yes, tiny data) with 132 cognitive concepts from the Cognitive Atlas. When you think of the internet, it’s not such big data, but it’s still enough to make putting it in a single figure challenging. Master Poldrack had sent me a paper from the Gallant Lab, and directed me to Figure 2:

I had remembered this work from the HIVE at Stanford, and what I took away from it was the idea for the strategy. If we wanted to look at the entire model for a concept, that’s easy, look at the brain maps. If we want to understand all of those brain maps at one voxel, then the visualization needs to be voxel-specific. This is what I decided to do.

Step 2: Web framework

Python is awesome, and the trend for neuroimaging analysis tools is moving toward Python dominance. Thus, I decided to use a small web framework called Flask that makes data –> server –> web almost seamless. It takes a template approach, meaning that you write views for a python-based server to render, and they render using jinja2 templates. You can literally make a website in under 5 minutes.

Step 3: Data preparation

This turned out to be easy. I could generate either tab delimited or python pickled (think a compressed data object) files, and store them with the visualizations in their respective Github repos.

Regions from the AAL Atlas

At first, I generated views to render a specific voxel location, some number from 1..28K that corresponded with an X,Y,Z coordinate. The usability of this is terrible. Is someone really going to remember that voxel N corresponds to “somewhere in the right Amygdala?” Probably not. What I needed was a region lookup table. I wasn’t decided yet about how it would work, but I knew I needed to make it. First, let’s import some bread and butter functions!

import pandas import nibabel import requests import xmltodict from nilearn.image import resample_img from nilearn.plotting import find_xyz_cut_coordsThe

requestslibrary is important for getting anything from a URL into a python program.nilearnis a nice machine learning library for python (that I usually don’t use for machine learning at all, but rather the helper functions), andxmltodictwill do exactly that, convert an xml file into a superior data format :). First, we are going to use the Neurovault RESTApi to both obtain a nice brain map, and the labels from it. In the script to run this particular python script, we have already downloaded the brain map itself, and now we are going to load it, resample to a 4mm voxel (to match the data in our model), and then associate a label with each voxel:# OBTAIN AAL2 ATLAS FROM NEUROVAULT data = nibabel.load("AAL2_2.nii.gz") img4mm = nibabel.load("MNI152_T1_4mm_brain_mask.nii.gz") # Use nilearn to resample - nearest neighbor interpolation to maintain atlas aal4mm = resample_img(data,interpolation="nearest",target_affine=img4mm.get_affine()) # Get labels labels = numpy.unique(aal4mm.get_data()).tolist() # We don't want to keep 0 as a label labels.sort() labels.pop(0) # OBTAIN LABEL DESCRIPTIONS WITH NEUROVAULT API url = "http://neurovault.org/api/atlases/14255/?format=json" response = requests.get(url).json()We now have a json object with a nice path to the labels xml! Let’s get that file, convert it to a dictionary, and then parse away, Merrill.

# This is an xml file with label descriptions xml = requests.get(response["label_description_file"]) doc = xmltodict.parse(xml.text)["atlas"]["data"]["label"] # convert to a superior data structure :)Pandas is a module that makes nice data frames. You can think of it like a numpy matrix, but with nice row and column labels, and functions to sort and find things.

# We will store region voxel value, name, and a center coordinate regions = pandas.DataFrame(columns=["value","name","x","y","z"]) # Count is the row index, fill in data frame with region names and indices count = 0 for region in doc: regions.loc[count,"value"] = int(region["index"]) regions.loc[count,"name"] = region["name"] count+=1I didn’t actually use this in the visualization, but I thought it might be useful to store a “representative” coordinate for each region:

# USE NILEARN TO FIND REGION COORDINATES (the center of the largest activation connected component) for region in regions.iterrows(): label = region[1]["value"] roi = numpy.zeros(aal4mm.shape) roi[aal4mm.get_data()==label] = 1 nii = nibabel.Nifti1Image(roi,affine=aal4mm.get_affine()) x,y,z = [int(x) for x in find_xyz_cut_coords(nii)] regions.loc[region[0],["x","y","z"]] = [x,y,z]and then save the data to file, both the “representative” coords, and the entire aal atlas as a squashed vector, so we can easily associate the 28K voxel locations with regions.

# Save data to file for application regions.to_csv("../data/aal_4mm_region_coords.tsv",sep="\t") # We will also flatten the brain-masked imaging data into a vector, # so we can select a region x,y,z based on the name region_lookup = pandas.DataFrame(columns=["aal"]) region_lookup["aal"] = aal4mm.get_data()[img4mm.get_data()!=0] region_lookup.to_pickle("../data/aal_4mm_region_lookup.pkl")For this first visualization, that was all that was needed in the way of data prep. The rest of the files I already had on hand, nicely formatted, from the analysis code itself.

Step 4: First Attempt: Clustering

My first idea was to do a sort of “double clustering.” I scribbled the following into an email late one night:

…there are two things we want to show. 1) is relationships between concepts, specifically for that voxel. 2) is the relationship between different contrasts, and then how those contrasts are represented by the concepts. The first data that we have that is meaningful for the viewer are the tagged contrasts. For each contrast, we have two things: an actual voxel value from the map, and a similarity metric to all other contrasts (spatial and/or semantic). A simple visualization would produce some clustering to show to the viewer how the concepts are similar / different based on distance. The next data that we have “within” a voxel is information about concepts at that voxel (and this is where the model is integrated). Specifically - a vector of regression parameters for that single voxel. These regression parameter values are produced via the actual voxel values at the map (so we probably would not use both). What I think we want to do is have two clusterings - first cluster the concepts, and then within each concept bubble, show a smaller clustering of the images, clustered via similarity, and colored based on the actual value in the image (probably some shade of red or blue).

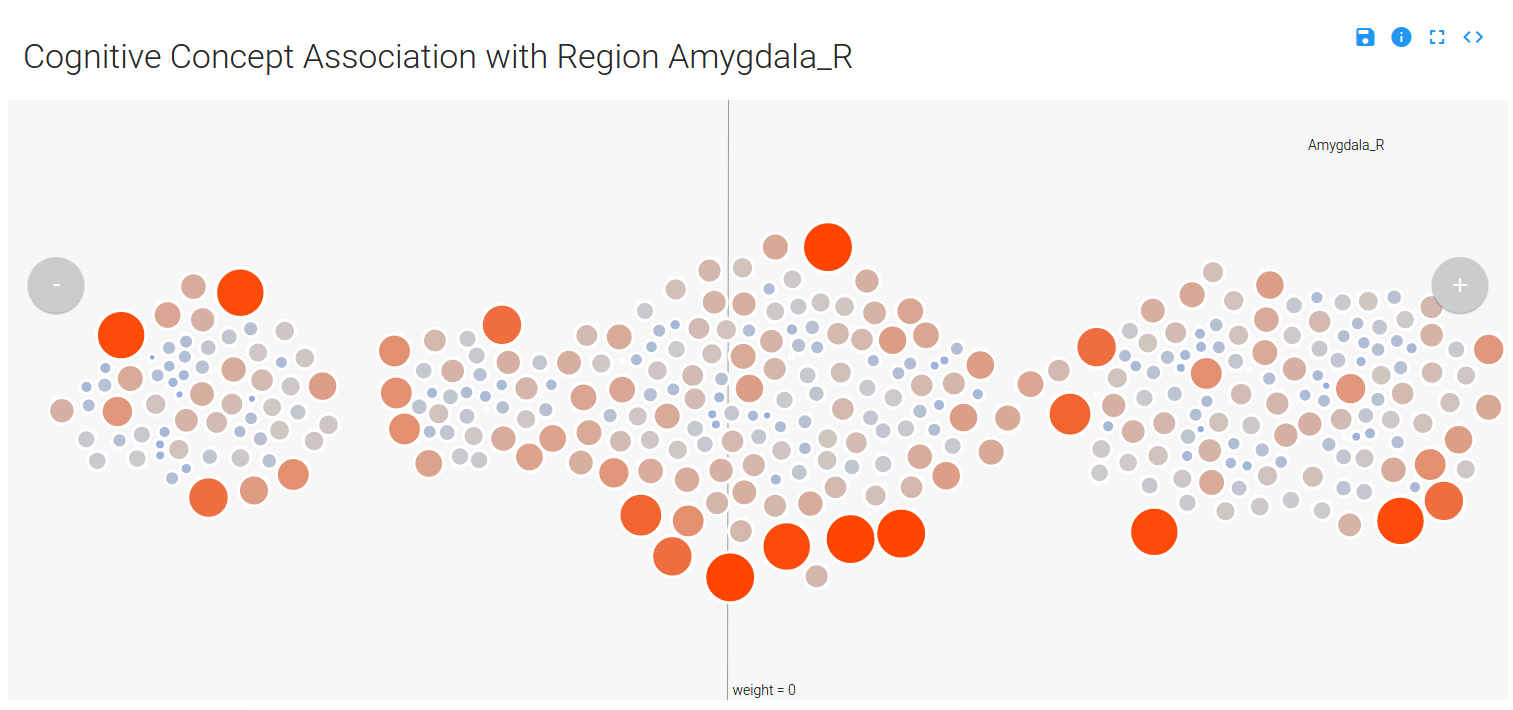

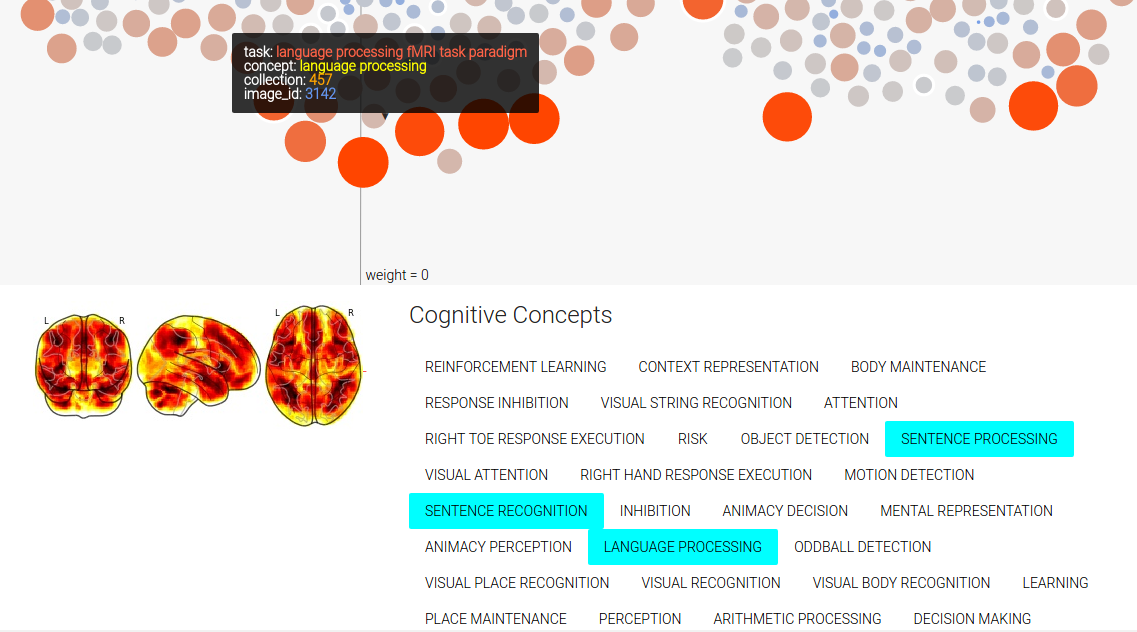

Yeah, please don’t read that. The summary is that I would show clusters of concepts, and within each concept cluster would be a cluster of images. Distance on the page, from left to right, would represent the contribution of the concept cluster to the model at the voxel. This turned out pretty cool:

You can mouse over a node, which is a contrast image (a brain map) associated with a particular cognitive concept, and see details (done by way of tipsy). Only concepts that have a weight (weight –> importance in the model) that is not zero are displayed (and this reduces the complexity of the visualization quite a bit), and the nodes are colored and sized based on their value in the original brain map (red/big –> positive, and blue/small –> negative):

You can use the controls in the top right to expand the image, save as SVG, link to the code, or read about the application:

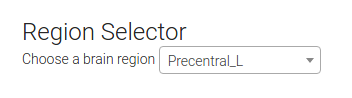

You can also select a region of choice from the dropdown menu, which uses select2 to complete your choice. At first I showed the user the voxel location I selected as “representative” for the region, but I soon realized that there were quite a few large regions in the AAL atlas, and that it would be incorrect and misleading to select a representative voxel. To embrace the variance within a region but still provide meaningful labels, I implemented it so that a user can select a region, and a random voxel from the region is selected:

... # Look up the value of the region value = app.regions.value[app.regions.name==name].tolist()[0] # Select a voxel coordinate at random voxel_idx = numpy.random.choice(app.region_lookup.index[app.region_lookup.aal == value],1)[0] return voxel(voxel_idx,name=name)Typically, Flask view functions return… views :). In this case, the view returned is the original one that I wrote (the function is called

voxel) to render a view based on a voxel id (from 1..28K). The user just sees a dropdown to select a region:

Finally, since there are multiple images tagged with the same concept in an image, you can mouse over a concept label to highlight those nodes in the image. You can also mouse over a concept label to highlight all the concepts associated with the image. We also obtain a sliced view of the image from NeuroVault to show to the user.

Step 5: Problems with First Attempt

I first thought it was a pretty OK job, until my extremely high-standard brain started to tell me how crappy it was. The first problem is that the same image is shown for every concept it’s relevant for, and that’s both redundant and confusing. It also makes no sense at all to be showing an entire brain map when the view is defined for just one voxel. What was I thinking?

The second problem is that the visualization isn’t intuitive. It’s a bunch of circles floating in space, and you have to read the “about” very careful to say “I think I sort of get it.” I tried to use meaningful things for color, size, and opacity, but it doesn’t give you really a sense of anything other than, maybe, magnetic balls floating in gray space.

I thought about this again. What a person really wants to know, quickly, are

1) which cognitive concepts are associated with the voxel?

2) How much?

3) How do the concepts relate in the ontology?I knew very quickly that the biggest missing component was some representation of the ontology. How was “recognition” related to “memory” ? Who knows! Let’s go back to the drawing table, but first, we need to prepare some new data.

Step 6: Generating a Cognitive Atlas Tree

A while back I added some functions to pybraincompare to generate d3 trees from ontologies, or anything you could represent with triples. Let’s do that with the concepts in our visualization to make a simple json structure that has nodes with children.

from pybraincompare.ontology.tree import named_ontology_tree_from_tsv from cognitiveatlas.datastructure import concept_node_triples import pickle import pandas import reFirst we will read in our images, and we only need to do this to get the image contrast labels (a contrast is a particular combination / subtraction of conditions in a task, like “looking at pictures of cats minus baseline”).

# Read in images metadata images = pandas.read_csv("../data/contrast_defined_images_filtered.tsv",sep="\t",index_col="image_id")The first thing we are going to do is generate a “triples data structure,” a simple format I came up with that would be simple for pybraincompare to understand that would allow it to render any kind of graph into the tree. It looks like this:

## STEP 1: GENERATE TRIPLES DATA STRUCTURE ''' id parent name 1 none BASE # there is always a base node 2 1 MEMORY # high level concept groups 3 1 PERCEPTION 4 2 WORKING MEMORY # concepts 5 2 LONG TERM MEMORY 6 4 image1.nii.gz # associated images (discovered by way of contrasts) 7 4 image2.nii.gz '''Each node has an id, a parent, and a name. For the next step, I found the unique contrasts represented in the data (we have more than one image for contrasts), and then made a lookup to find sets of images based on the contrast.

# We need a dictionary to look up image lists by contrast ids unique_contrasts = images.cognitive_contrast_cogatlas_id.unique().tolist() # Images that do not match the correct identifier will not be used (eg, "Other") expression = re.compile("cnt_*") unique_contrasts = [u for u in unique_contrasts if expression.match(u)] image_lookup = dict() for u in unique_contrasts: image_lookup[u] = images.index[images.cognitive_contrast_cogatlas_id==u].tolist()To make the table I showed above, I had added a function to the Cognitive Atlas API python wrapper called concept_node_triples.

output_triples_file = "../data/concepts.tsv" # Create a data structure of tasks and contrasts for our analysis relationship_table = concept_node_triples(image_dict=image_lookup,output_file=output_triples_file)The function includes the contrast images themselves as nodes, so let’s remove them from the data frame before we generate and save the JSON object that will render into a tree:

# We don't want to keep the images on the tree keep_nodes = [x for x in relationship_table.id.tolist() if not re.search("node_",x)] relationship_table = relationship_table[relationship_table.id.isin(keep_nodes)] tree = named_ontology_tree_from_tsv(relationship_table,output_json=None) pickle.dump(tree,open("../data/concepts.pkl","w")) json.dump(tree,open("../static/concepts.json",'w'))Boum! Ok, now back to the visualization!

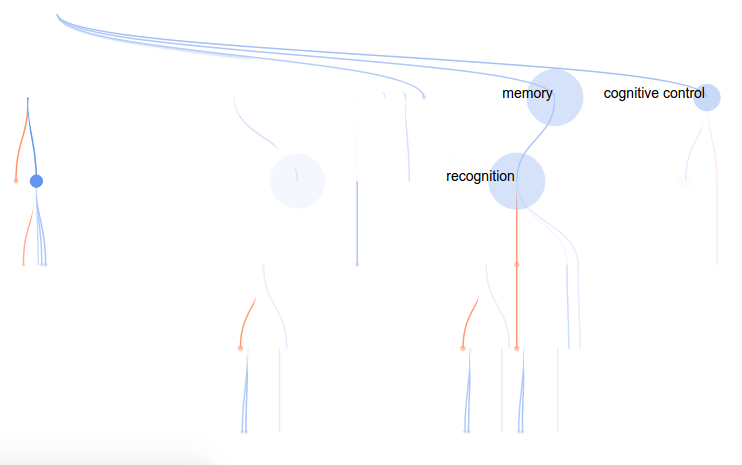

Step 7: Second Attempt: Tree

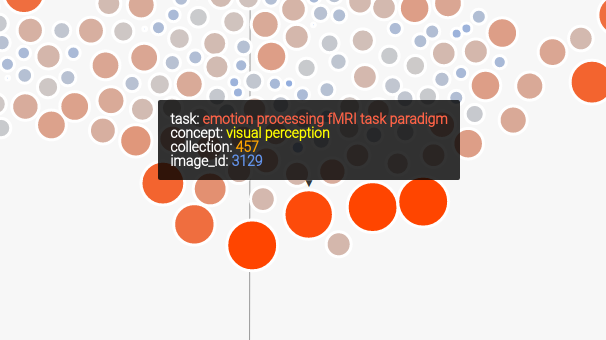

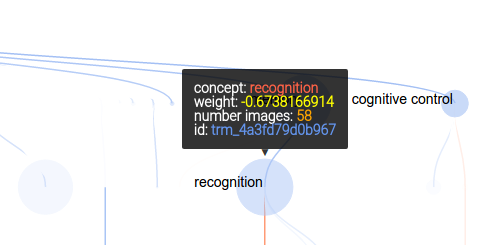

For this attempt, I wanted to render a concept tree in the browser, with each node in the tree corresponding to a cognitive concept, and colored by the “importance” (weight) in the model. As before, red would indicate positive weight, and blue negative (this is a standard in brain imaging, by the way). To highlight the concepts that are relevant for the particular voxel model, I decided to make the weaker nodes more transparent, and nodes with no contribution (weight = 0) completely invisible. However, I would maintain the tree structure to give the viewer a sense of distance in the ontology (distance –> similarity). This tree would also solve the problem of understanding relationships between concepts. They are connected!

As before, mousing over a node provides more information:

and the controls are updated slightly to include a “find in page” button:

Which, when you click on it, brings up an overlay where you can select any cogntiive concepts of your choice with clicks, and they will light up on the tree!

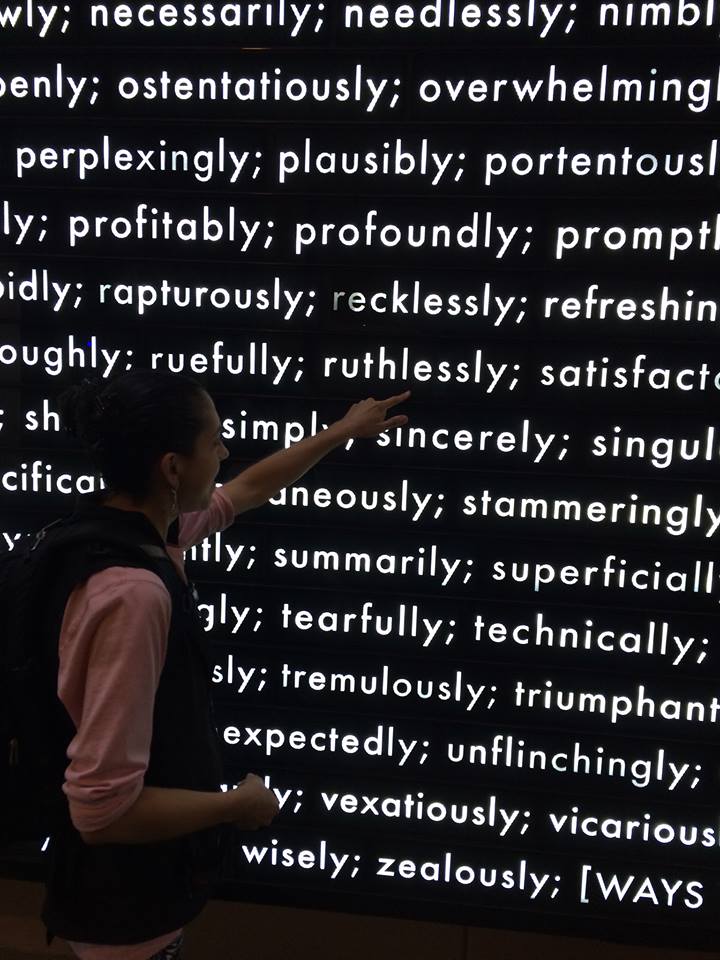

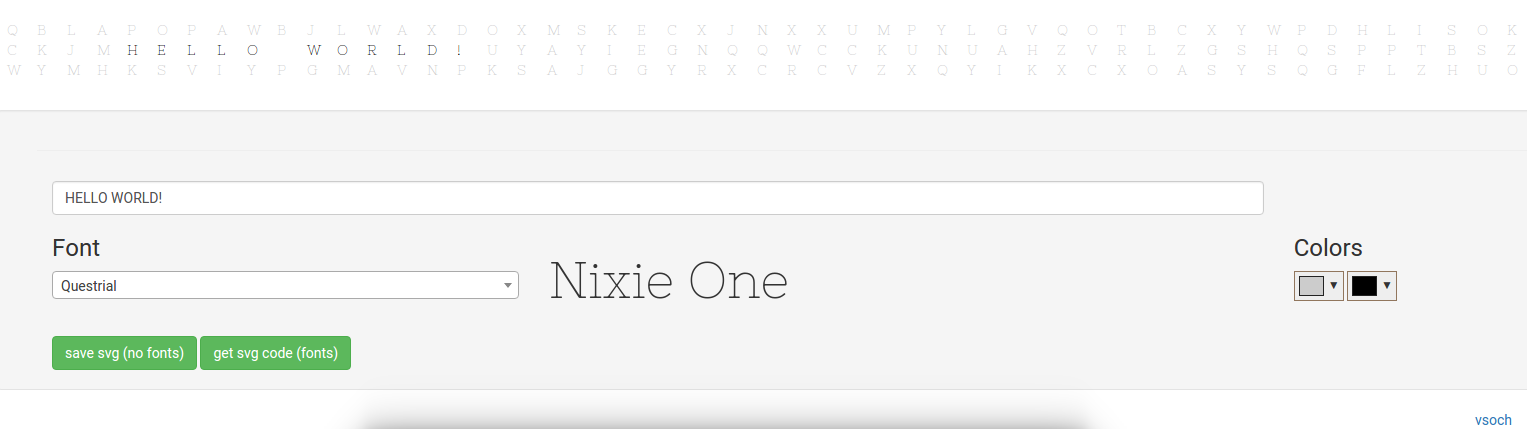

If you want to know the inspiration for this view, it’s a beautiful installation at the Stanford Business School that I’m very fond of:

The labels were troublesome, because if I rendered too many it was cluttered and unreadable, and if I rendered too few it wasn’t easy to see what you were looking at without mousing over things. I found a rough function that helped a bit, but my quick fix was to simply limit the labels shown based on the number of images (count) and the regression parameter weight:

// Add concept labels var labels = node.append("text") .attr("dx", function (d) { return d.children ? -2 : 2; }) .attr("dy", 0) .classed("concept-label",true) .style("font","14px sans-serif") .style("text-anchor", function (d) { return d.children ? "end" : "start"; }) .html(function(d) { // Only show label for larger nodes with regression parameter >= +/- 0.5 if ((counts[d.nid]>=15) && (Math.abs(regparams[d.nid])>=0.5)) { return d.name } });Step 8: Make it reproducible

You can clone the repo on your local machine and run the visualization with native Flask:

git clone https://github.com/vsoch/cogatvoxel cd cogatvoxel python index.pyNotice anything missing? Yeah, how about installing dependencies, and what if the version of python you are running isn’t the one I developed it in? Eww. The easy answer is to Dockerize! It was relatively easy to do, I would use docker-compose to grab an nginx (web server) image, and my image vanessa/cogatvoxeltree built on Docker Hub. The Docker Hub image is built from the Dockerfile in the repo, which installs dependencies, maps the code to a folder in the container called

/codeand then exposes port 8000 for Flask:FROM python:2.7 ENV PYTHONUNBUFFERED 1 RUN apt-get update && apt-get install -y \ libopenblas-dev \ gfortran \ libhdf5-dev \ libgeos-dev MAINTAINER Vanessa Sochat RUN pip install --upgrade pip RUN pip install flask RUN pip install numpy RUN pip install gunicorn RUN pip install pandas ADD . /code WORKDIR /code EXPOSE 8000Then the docker-compose file uses this image, along with the nginx web server (this is pronounced “engine-x” and I’ll admit it took me probably 5 years to figure that out).

web: image: vanessa/cogatvoxeltree restart: always expose: - "8000" volumes: - /code/static command: /usr/local/bin/gunicorn -w 2 -b :8000 index:app nginx: image: nginx restart: always ports: - "80:80" volumes: - /www/static volumes_from: - web links: - web:webIt’s probably redundant to again expose port 8000 in my application (the top one called “web”), and add

/www/staticto the web server static. To make things easy, I decided to use gunicorn to manage serving the application. There are many ways to skin a cat, there are ways to run a web server… I hope you choose web servers over skinning cats.That’s about it. It’s a set of simple Flask applications to render data into a visualization, and it’s containerized. To be honest, I think the first is a lot cooler, but the second is on its way to a better visualization for the problem at hand. There is still a list of things that need fixing and tweaking (for example, not giving the user control over the threshold for showing the node and links is not ok), but I’m much happier with this second go. On that note, I’ll send a cry for reproducibility out to all possible renderings of data in a browser…

·Visualizations, contain yourselves!

-

Wordfish: tool for standard corpus and terminology extraction

If pulling a thread of meaning from woven text

is that which your heart does wish.

Not so absurd or seemingly complex,

if you befriend a tiny word fish.

I developed a simple tool for standard extraction of terminology and corpus, Wordfish, that is easily deployed to a cluster environment. I’m graduating (hopefully, tentatively, who knows) soon, and because publication is unlikely, I will write about the tool here, in the case it is useful to anyone. I did this project for fun, mostly because I found DeepDive to be overly complicated for my personal goal of extracting a simple set of terms from a corpus in the case that I couldn’t define relationships apriori (I wanted to learn them from the data). Thus I used neural networks (word2vec) to learn term relationships based on their context. I was able to predict reddit boards for different mental illness terms with high accuracy, and it sort of ended there because I couldn’t come up with a good application in Cognitive Neuroscience, and no “real” paper is going to write about predicting reddit boards. I was sad to not publish something, but then I realized I am empowered to write things on the internet. :) Not only that, I can make up my own rules. I don’t have to write robust methods with words, I will just show and link you to code. I might even just use bulletpoints instead of paragraphs. For results, I’ll link to ipython notebooks. I’m going to skip over the long prose and trust that if you want to look something up, you know how to use Google and Wikipedia. I will discuss the tool generally, and show an example of how it works. First, an aside about publication in general - feel free to skip this if you aren’t interested in discussing the squeaky academic machine.

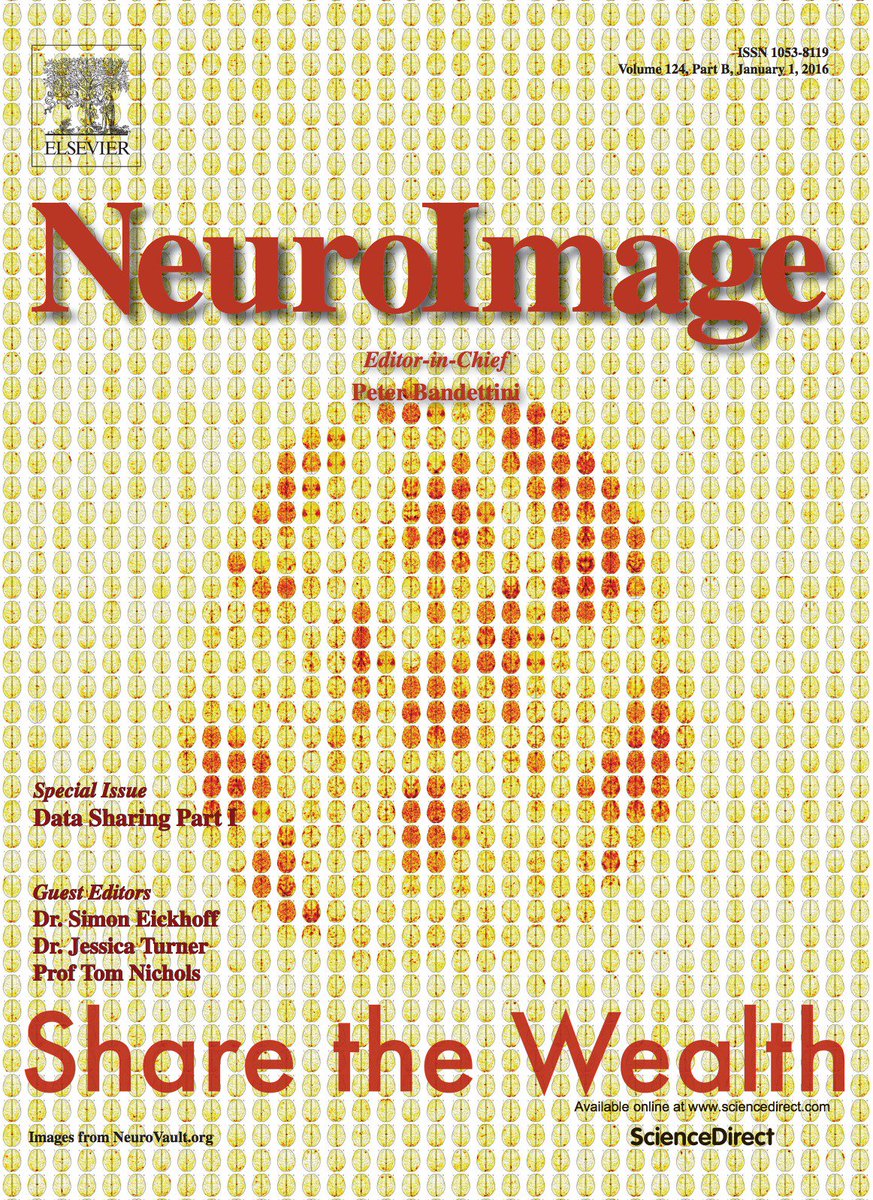

Why sharing incomplete methods can be better than publication

It’s annoying that there is not a good avenue, or even more so, that it’s not desired or acceptable, to share a simple (maybe even incomplete) method or tool that could be useful to others in a different context. Publication requires the meaningful application. It’s annoying that, as researchers, we salivate for these “publication” things when the harsh reality is that this slow, inefficient process results in yet another PDF/printed thing with words on a page, offering some rosy description of an analysis and result (for which typically minimal code or data is shared) that makes claims that are over-extravagant in order to be sexy enough for publication in the first place (I’ve done quite a bit of this myself). A publication is a static thing that, at best, gets cited as evidence by another paper (and likely the person making the citation did not read the paper to full justice). Maybe it gets parsed from pubmed in someone’s meta analysis to try and “uncover” underlying signal across many publications that could have been transparently revealed in some data structure in the first place. Is this workflow really empowering others to collaboratively develop better methods and tools? I think not. Given the lack of transparency, I’m coming to realize that it’s much faster to just share things early. I don’t have a meaningful biological application. I don’t make claims that this is better than anything else. This is not peer reviewed by some three random people that gives it a blessing like from a rabbi. I understand the reasons for these things, but the process of conducting research, namely hiding code and results toward that golden nugget publication PDF, seems so against a vision of open science. Under this context, I present Wordfish.

Wordfish: tool for standard corpus and terminology extraction

Abstract

The extraction of entities and relationships between them from text is becoming common practice. The availability of numerous application program interfaces (API) to extract text from social networks, blogging platforms and feeds, standard sources of knowledge is continually expanding, offering an extensive and sometimes overwhelming source of data for the research scientist. While large corporations might have exclusive access to data and robust pipelines for easily obtaining the data, the individual researcher is typically granted limited access, and commonly must devote substantial amounts of time to writing extraction pipelines. Unfortunately, these pipelines are usually not extendable beyond the dissemination of any result, and the process is inefficiently repeated. Here I present Wordfish, a tiny but powerful tool for the extraction of corpus and terms from publicly available sources. Wordfish brings standardization to the definition and extraction of terminology sources, providing an open source repository for developers to write plugins to extend their specific terminologies and corpus to the framework, and research scientists an easy way to select from these corpus and terminologies to perform extractions and drive custom analysis pipelines. To demonstrate the utility of this tool, I use Wordfish in a common research framework: classification. I first build deep learning models to predict Reddit boards from post content with high accuracy. I hope that a tool like Wordfish can be extended to include substantial plugins, and can allow easier access to ample sources of textual content for the researcher, and a standard workflow for developers to add a new terminology or corpus source.

Introduction

While there is much talk of “big data,” when you peek over your shoulder and look at your colleague’s dataset, there is a pretty good chance that it is small or medium sized. When I wanted to extract terms and relationships from text, I went to DeepDive, the ultimate powerhouse to do this. However, I found that setting up a simple pipeline required database and programming expertise. I have this expertise, but it was tenuous. I thought that it should be easy to do some kind of NLP analysis, and combine across different corpus sources. When I started to think about it, we tend to reuse the same terminologies (eg, an ontology) and corpus (pubmed, reddit, wikipedia, etc), so why not implement an extraction once, and then provide that code for others? This general idea would make a strong distinction between a developer, meaning an individual best suited to write the extraction pipeline, and the researcher, an individual best suited to use it for analysis. This sets up the goals of Wordfish: to extract terms from a corpus, and then do some higher level analysis, and make it standardized and easy.

Wordfish includes data structures that can capture an input corpus or terminology, and provides methods for retrieval and extraction. Then, it allows researchers to create applications that interactively select from the available corpus and terminologies, deploy the applications in a cluster environment, and run an analysis. This basic workflow is possible and executable without needing to set up an entire infrastructure and re-writing the same extraction scripts that have been written a million times before.

Methods

The overall idea behind the infrastructure of wordfish is to provide terminologies, corpus, and an application for working with them in a modular fashion. This means that Wordfish includes two things, wordfish-plugins and wordfish-python. Wordfish plugins are modular folders, each of which provides a standard data structure to define extraction of a corpus, terminology or both. Wordfish python is a simple python module for generating an application, and then deploying the application on a server to run analyses.

Wordfish Plugins

A wordfish plugin is simply a folder with typically two things: a functions.py file to define functions for extraction, and a config.json that is read by wordfish-python to deploy the application. We can look at the structure of a typical plugin:

plugin functions.py __init__.py config.jsonSpecifically, the functions.py has the following functions:

1) extract_terms: function to call to return a data structure of terms

2) extract_text: function to call to return a data structure of text (corpus)

3) extract_relations: function to call to return a data structure of relations

4) functions.py: is the file in the plugin folder to store these functionsThe requirement of every

functions.pyis an import of general functions fromwordfish-pythonthat will save a data structure for a corpus, terminology, or relationships:# IMPORTS FOR ALL PLUGINS from wordfish.corpus import save_sentences from wordfish.terms import save_terms from wordfish.terms import save_relations from wordfish.plugin import generate_jobThe second requirement is a function,

go_fish, which is the main function to be called bywordfish-pythonunder the hood. In this function, the user writing the plugin can make as many calls togenerate_jobas necessary. A call to generate job means that a slurm job file will be written to run a particular function (func) with a specified category or extraction type (e.g.,terms,corpus, orrelations). This second argument helps the application determine how to save the data. Ago_fishfunction might look like this:# REQUIRED WORDFISH FUNCTION def go_fish(): generate_job(func="extract_terms",category="terms") generate_job(func="extract_relations",category="relations")The above will generate slurm job files to be run to extract terms and relations. Given input arguments are required for the function, the specification can look as follows:

generate_job(func="extract_relations",inputs={"terms":terms,"maps_dir":maps_dir},category="relations",batch_num=100)where

inputsis a dictionary of keys being variable names, values being the variable value. The addition of thebatch_numvariable also tells the application to split the extraction into a certain number of batches, corresponding to SLURM jobs. This is needed in the case that running a node on a cluster is limited to some amount of time, and the user wants to further parallelize the extraction.Extract terms

Now we can look at more detail at the

extract_termsfunction. For example, here is this function for the cognitive atlas. Theextract_termswill return a json structure of termsdef extract_terms(output_dir): terms = get_terms() save_terms(terms,output_dir=output_dir)You will notice that the

extract_termsfunction uses another function that is defined infunctions.py,get_terms. The user is free to include in thewordfish-pluginfolder any number of additional files or functions that assist toward the extraction. Here is whatget_termslooks like:def get_terms(): terms = dict() concepts = get_concepts() for c in range(len(concepts)): concept_id = concepts[c]["id"] meta = {"name":concepts[c]["name"], "definition":concepts[c]["definition_text"]} terms[concept_id] = meta return termsThis example is again from the Cognitive Atlas, and we are parsing cognitive ceoncepts into a dictionary of terms. For each cognitive concept, we are preparing a dictionary (JSON data structure) with fields

name, anddefinition.We then put that into another dictionarytermswith the key as the unique id. This unique id is important in that it will be used to link between term and relations definitions. You can assume that the other functions (e.g.,get_conceptsare defined in thefunctions.pyfile.Extract relations

For

extract_relationswe return a tuple of the format(term1_id,term2_id,relationship):def extract_relations(output_dir): links = [] terms = get_terms() concepts = get_concepts() for concept in concepts: if "relationships" in concept: for relation in concept["relationships"]: relationship = "%s,%s" %(relation["direction"],relation["relationship"]) tup = (concept["id"],relation["id"],relationship) links.append(tup) save_relations(terms,output_dir=output_dir,relationships=links)Extract text

Finally,

extract_textreturns a data structure with some unique id and a blob of text. Wordfish will parse and clean up the text. The data structure for a single article is again, just JSON:corpus[unique_id] = {"text":text,"labels":labels}Fields include the actual text, and any associated labels that are important for classification later. The corpus (a dictionary of these data structures) gets passed to

save_sentencessave_sentences(corpus_input,output_dir=output_dir)More detail is provided in the wordfish-plugin README

The plugin controller: config.json

The plugin is understood by the application by way of a folder’s

config.json, which might look like the following:[ { "name": "NeuroSynth Database", "tag": "neurosynth", "corpus": "True", "terms": "True", "labels": "True", "relationships":"True", "dependencies": { "python": [ "neurosynth", "pandas" ], "plugins": ["pubmed"] }, "arguments": { "corpus":"email" }, "contributors": ["Vanessa Sochat"], "doi": "10.1038/nmeth.1635", } ]1) name: a human readable description of the plugin

2) tag: a term (no spaces or special characters) that corresponds with the folder name in the plugins directory. This is a unique id for the plugin.

3) corpus/terms/relationships: boolean, each “True” or “False” should indicate if the plugin can return a corpus (text to be parsed by wordfish) or terms (a vocabulary to be used to find mentions of things), or relations (relationships between terms). This is used to parse current plugins available for each purpose, to show to the user.

4) dependencies: should include “python” and “plugins.”Pythoncorresponds to python packages that are dependencies, and these plugins are installed by the overall application.Pluginsrefers to other plugins that are required, such as pubmed. This is an example of a plugin that does not offer to extract a specific corpus, terminology, or relations, but can be included in an application for other plugins to use. In the example above, the neurosynth plugin requires retrieving articles from pubmed, so the plugin develop specifies needing pubmed as a plugin dependency.

5) arguments: a dictionary with (optionally) corpus and/or terms. The user will be asked for these arguments to run theextract_textandextract_termsfunctions.

6) contributors: a name/orcid ID or email of researchers responsible for creation of the plugins. This is for future help and debugging.

7) doi: a reference or publication associated with the resource. Needed if it’s important for the creator of the plugin to ascribe credit to some published work.Best practices for writing plugins

Given that a developer is writing a plugin, it is generally good practice to print to the screen what is going on, and how long it might take, as a courtesy to the user, if something needs review or debugging.

“Extracting relationships, will take approximately 3 minutes”

The developer should also use clear variable names, well documented and spaced functions (one liners are great in python, but it’s more understandable by the reader if to write out a loop sometimes), and attribute function to code that is not his. Generally, the developer should just follow good practice as a coder and human being.

Functions provided by Wordfish

While most users and clusters have internet connectivity, it cannot be assumed, and an error in attempting to access an online resource could trigger an error. If a plugin has functions that require connectivity, Wordfish provides a function to check:

from wordfish.utils import has_internet_connectivity if has_internet_connectivity(): # Do analysisIf the developer needs a github repo, Wordfish has a function for that:

from wordfish.vm import download_repo repo_directory = download_repo(repo_url="https://github.com/neurosynth/neurosynth-data")If the developer needs a general temporary place to put things,

tempfileis recommended:import tempfile tmpdir = tempfile.mkdtemp()Wordfish has other useful functions for downloading data, or obtaining a url. For example:

from wordfish.utils import get_url, get_json from wordfish.standards.xml.functions import get_xml_url myjson = get_json(url) webpage = get_url(url) xml = get_xml_url(url)Custom Applications with Wordfish Python

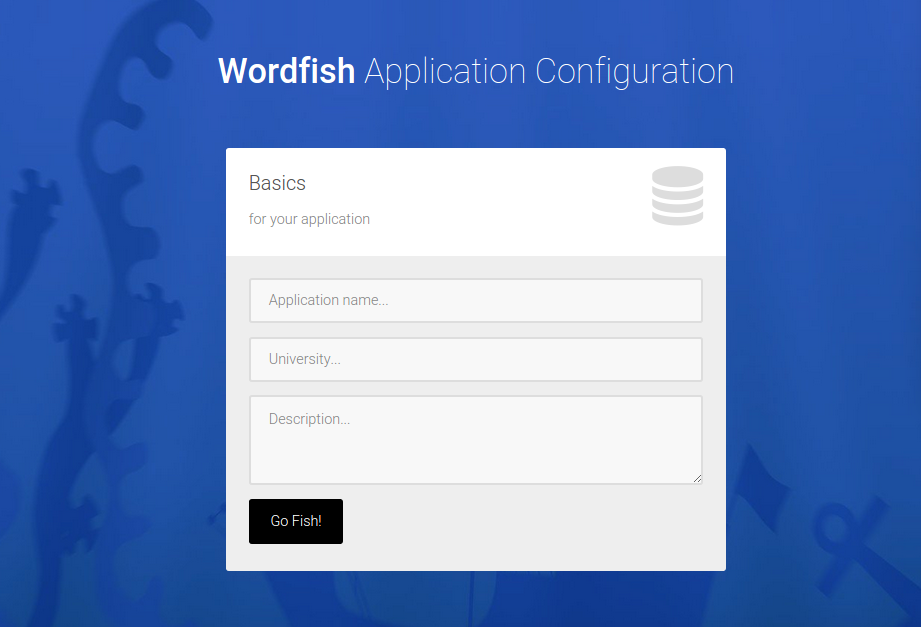

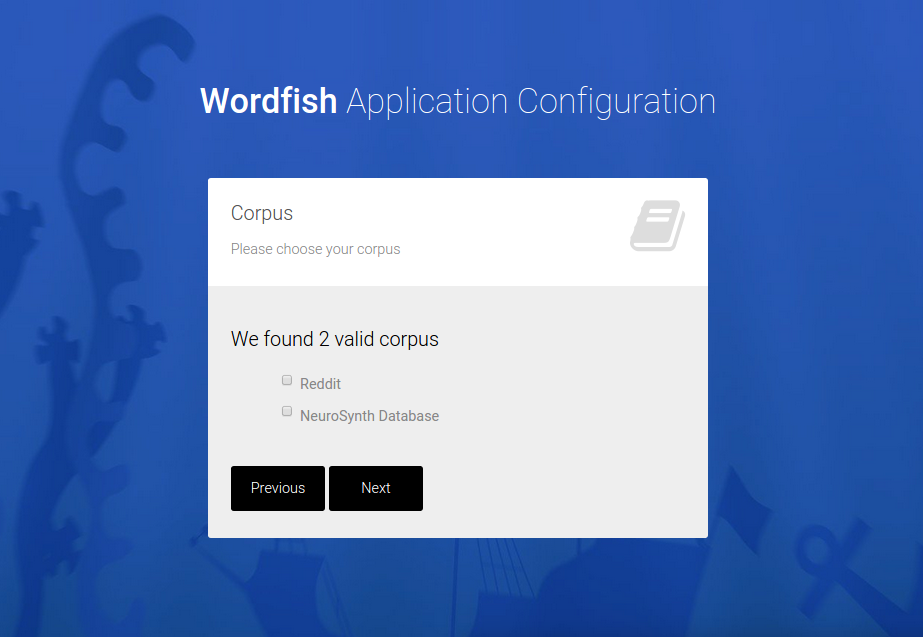

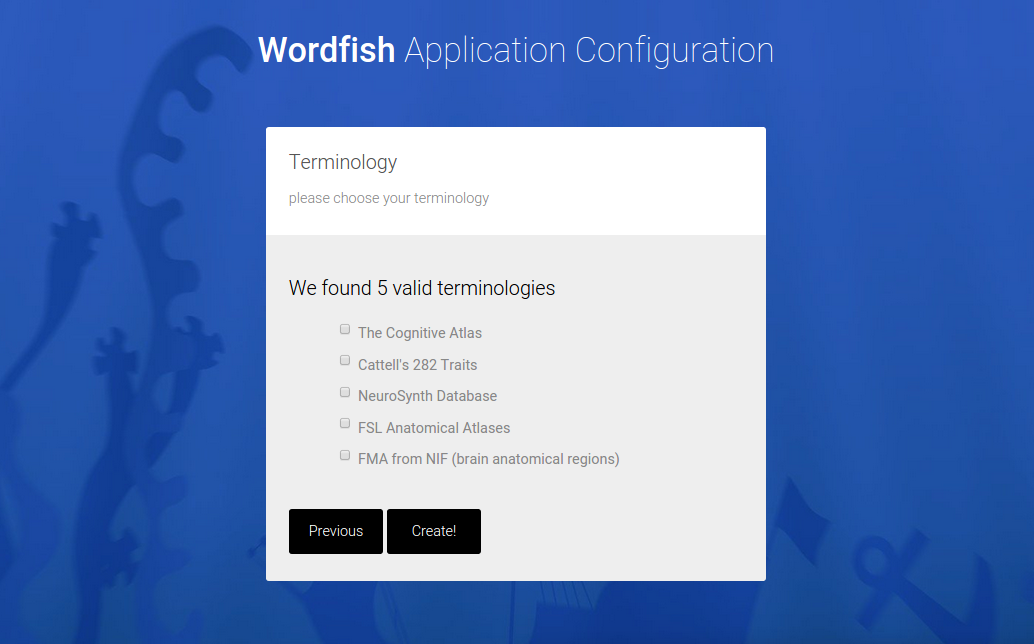

The controller, wordfish-python is a flask application that provides the user (who is just wanting to generate an application) with an interactive web interface for doing so. It is summarized nicely in the README:

Choose your input corpus, terminologies, and deployment environment, and an application will be generated to use deep learning to extract features for text, and then entities can be mapped onto those features to discover relationships and classify new texty things. Custom plugins will allow for dynamic generation of corpus and terminologies from data structures and standards of choice from wordfish-plugins You can have experience with coding (and use the functions in the module as you wish), or no experience at all, and let the interactive web interface walk you through generation of your application.

Installation can be done via github or pip:

pip install git+git://github.com/word-fish/wordfish-python.git pip install wordfishAnd then the tool is called to open up a web interface to generate the application:

wordfish

The user then selects terminologies and corpus.

And a custom application is generated, downloaded as a zip file in the browser. A “custom application” means a folder that can be dropped into a cluster environment, and run to generate the analysis,

Installing in your cluster environment

The user can drop the folder into a home directory of the cluster environment, and run the install script to install the package itself, and generate the output folder structure. The only argument that is needed is to supply is the base of the output directory:

WORK=/scratch/users/vsochat/wordfish bash install.sh $WORKAll scripts for the user to run are in the scripts folder here:

cd $WORK/scriptsEach of these files corresponds to a step in the pipeline, and is simply a list of commands to be run in parallel. The user can use launch, or submit each command to a SLURM cluster. A basic script is provided to help submit jobs to a SLURM cluster, and this could be tweaked to work with other clusters (e.g., SGE).

Running the Pipeline

After the installation of the custom application is complete, this install script simply runs

run.py, which generates all output folders and running scripts. the user has a few options for running:1) submit the commands in serial, locally. The user can run a job file with bash,

bash run_extraction_relationships.job

2) submit the commands to a launch cluster, something likelaunch -s run_extraction_relationships.job

3) submit the commands individually to a slurm cluster. This will mean reading in the file, and submitting each script with a line likesbatch -p normal -j myjob.job [command line here]Output structure

The jobs are going to generate output to fill in the following file structure in the project base folder, which again is defined as an environmental variable when the application is installed (files that will eventually be produced are shown):

WORK corpus corpus1 12345_sentences.txt 12346_sentences.txt corpus2 12345_sentences.txt 12346_sentences.txt terms terms1_terms.txt terms2_relationships.txt scripts run_extraction_corpus.job run_extraction_relationships.job run_extraction_terms.jobThe folders are generated dynamically by the

run.pyscript for each corpus and terms plugin based on thetagvariable in the plugin’sconfig.json. Relationships, by way of being associated with terms, are stored in the equivalent folder, and the process is only separate because it is not the case that all plugins for terms can have relationships defined. The corpus are kept separate at this step as the output has not been parsed into any standard unique id space. Wordfish currently does not do this, but if more sophisticated applications are desired (for example with a relational database), this would be a good strategy to take.Analysis

Once the user has files for corpus and terms, he could arguably do whatever he pleases with them. However, I found the word2vec neural network to be incredibly easy and cool, and have provided a simple analysis pipeline to use it. This example will merge all terms and corpus into a common framework, and then show examples of how to do basic comparisons, and vector extraction (custom analyses scripts can be based off of this). We will do the following:

1) Merge terms and relationships into a common corpus

2) For all text extract features with deep learning (word2vec)

3) Build classifiers to predict labels from vectorsWord2Vec Algorithm

First, what is a word2vec model? Generally, Word2Vec is a neural network implementation that will allow us to learn word embeddings from text, specifically a matrix of words by some N features that best predict the neighboring words for each term. This is an interesting way to model a text corpus because it’s not about occurrence, but rather context, of words, and we can do something like compare a term “anxiety” in different contexts. If you want equations, see this paper.

The problem Wordfish solves

Wordfish currently implements Word2Vec. Word2Vec is an unsupervised model. Applications like DeepDive take the approach that a researcher knows what he or she is looking for, requiring definition of entities as first step before their extraction from a corpus. This is not ideal given that a researcher has no idea about these relationships, or lacks either positive or negative training examples. In terms of computational requirements, Deepdive also has some that are unrealistic. For example, using the Stanford Parser is required to determine parts of speech and perform named entity recognition. While this approach is suitable for a large scale operation to mine very specific relationships between well-defined entities in a corpus, for the single researcher that wants to do simpler natural language processing, and perhaps doesn’t know what kind of relationships or entities to look for, it is too much. This researcher may want to search for some terms of interest across a few social media sources, and build models to predict one type of text content from another. The researcher may want to extract relationships between terms without having a good sense of what they are to begin with, and definition of entities, relationships, and then writing scripts to extract both should not be a requirement. While it is reasonable to ask modern day data scientists to partake in small amounts of programming, substantial setting up of databases and writing extraction pipelines should not be a requirement. A different approach that is taken by Wordfish is to provide plugins for the user to interactively select corpus and terminology, deploy their custom application in their computational environment of choice, and perform extraction using the tools that are part of their normal workflows, which might be a local command line or computing cluster.

When the DeepDive approach makes sense, the reality is that setting up the infrastructure to deploy DeepDive is really hard. When we think about it, the two applications are solving entirely different problems. All we really want to do is discover how terms are related in text. We can probably do ok to give DeepDive a list of terms, but then to have to “know” something about the features we want to extract, and have positive and negative cases for training is really annoying. If it’s annoying for a very simple toy analysis (finding relationships between cognitive concepts) I can’t even imagine how that annoyingness will scale when there are multiple terminologies to consider, different relationships between the terms, and a complete lack of positive and negative examples to validate. This is why I created Wordfish, because I wanted an unsupervised approach that required minimal set up to get to the result. Let’s talk a little more about the history of Word2Vec from this paper.

The N-Gram Model

The N-gram model (I think) is a form of hidden Markov Model where we model the P(word) given the words that came before it. The authors note that N-gram models work very well for large data, but in the case of smaller datasets, more complex methods can make up for it. However, it follows logically that a more complex model on a large dataset gives us the best of all possible worlds. Thus, people started using neural networks for these models instead.

simple models trained on huge amounts of data outperform complex systems trained on less data.

The high level idea is that we are going to use neural networks to represent words as vectors, word “embeddings.” Training is done with stochastic gradient descent and backpropagation.

How do we assess the quality of word vectors?

Similar words tend to be close together, and given a high dimensional vector space, multiple representations/relationships can be learned between words (see top of page 4). We can also perform algebraic computations on vectors and discover cool relationships, for example, the vector for V(King) - V(Man) + V(Woman) is close to V(Queen). The most common metric to compare vectors seems to be cosine distance. The interesting thing about this approach reported here is that by combining individual word vectors we can easily represent phrases, and learn new interesting relationships.

Two different algorithm options

You can implement a continuous bag of words (CBOW) or skip-gram model: 1) CBOW: predicts the word given the context (other words)

2) skip-gram: predicts other words (context) given a word (this seems more useful for what we want to do)They are kind of like inverses of one another, and the best way to show this is with a picture:

Discarding Frequent Words

The paper notes that having frequent words in text is not useful, and that during training, frequent words are discarded with a particular probability based on the frequency. They use this probability in a sampling procedure when choosing words to train on so the more frequent words are less likely to be chosen. For more details, see here, and search Google.

Building Word2Vec Models

First, we will train a simple word2vec model with different corpus. And to do this we can import functions from Wordfish, which is installed by the application we generated above.

from wordfish.analysis import build_models, save_models, export_models_tsv, load_models, extract_similarity_matrix, export_vectors, featurize_to_corpus from wordfish.models import build_svm from wordfish.corpus import get_corpus, get_meta, subset_corpus from wordfish.terms import get_terms from wordfish.utils import mkdir import osInstallation of the application also write the environmental variable

WORDFISH_HOMEto your bash profile, so we can reference it easily:base_dir = os.environ["WORDFISH_HOME"]It is generally good practice to keep all components of an analysis well organized in the output directory. It makes sense to store analyses, models, and vectors:

# Setup analysis output directories analysis_dir = mkdir("%s/analysis" %(base_dir)) model_dir = mkdir("%s/models" %(analysis_dir)) vector_dir = mkdir("%s/vectors" %(analysis_dir))Wordfish then has nice functions for generating a corpus, meaning removing stop words, excess punctuation, and the typical steps in NLP analyses. The function

get_corpusreturns a dictionary, with the key being the unique id of the corpus (the folder name,tagof the original plugin). We can then use thesubset_corpusplugin if we want to split the corpus into the different groups (defined by the labels we specified in the initial data structure):# Generate more specific corpus by way of file naming scheme corpus = get_corpus(base_dir) reddit = corpus["reddit"] disorders = subset_corpus(reddit) corpus.update(disorders)We can then train corpus-specific models, meaning word2vec models.

# Train corpus specific models models = build_models(corpus)Finally, we can export models to tsv, export vectors, and save the model so we can easily load again.

# Export models to tsv, export vectors, and save save_models(models,base_dir) export_models_tsv(models,base_dir) export_vectors(models,output_dir=vector_dir)I want to note that I used gensim for learning and some methods. The work and examples from Dato are great!

Working with models

Wordfish provides functions for easily loading a model that is generated from a corpus:

model = load_models(base_dir)["neurosynth"]You can then do simple things, like find the most similar words for a query word:

model.most_similar("anxiety") # [('aggression', 0.77308839559555054), # ('stress', 0.74644440412521362), # ('personality', 0.73549789190292358), # ('excessive', 0.73344630002975464), # ('anhedonia', 0.73305755853652954), # ('rumination', 0.71992391347885132), # ('distress', 0.7141801118850708), # ('aggressive', 0.7049030065536499), # ('craving', 0.70202392339706421), # ('trait', 0.69775849580764771)]It’s easy to see that corpus context is important - here is finding similar terms for the “reddit” corpus:

model = load_models(base_dir)["reddit"] model.most_similar("anxiety") # [('crippling', 0.64760375022888184), # ('agoraphobia', 0.63730186223983765), # ('generalized', 0.61023455858230591), # ('gad', 0.59278655052185059), # ('hypervigilance', 0.57659250497817993), # ('bouts', 0.56644737720489502), # ('depression', 0.55617612600326538), # ('ibs', 0.54766887426376343), # ('irritability', 0.53977066278457642), # ('ocd', 0.51580017805099487)]Here are examples of performing addition and subtraction with vectors:

model.most_similar(positive=['anxiety',"food"]) # [('ibs', 0.50205761194229126), # ('undereating', 0.50146859884262085), # ('boredom', 0.49470821022987366), # ('overeating', 0.48451068997383118), # ('foods', 0.47561675310134888), # ('cravings', 0.47019645571708679), # ('appetite', 0.46869537234306335), # ('bingeing', 0.45969703793525696), # ('binges', 0.44506731629371643), # ('crippling', 0.4397256076335907)] model.most_similar(positive=['bipolar'], negative=['manic']) # [('nos', 0.36669495701789856), # ('adhd', 0.36485755443572998), # ('autism', 0.36115738749504089), # ('spd', 0.34954413771629333), # ('cptsd', 0.34814098477363586), # ('asperger', 0.34269329905509949), ('schizotypal', 0.34181860089302063), ('pi', 0.33561226725578308), ('qualified', 0.33355745673179626), ('diagnoses', 0.32854354381561279)] model.similarity("anxiety","depression") #0.67751728687122414 model.doesnt_match(["child","autism","depression","rett","cdd","young"]) #'depression'And to get the raw vector for a word:

model["depression"]Extracting term similarities

To extract a pairwise similarity matrix, you can use the function

extract_similarity_matrix. These are the data driven relationships between terms that the Wordfish infrastructure provides:# Extract a pairwise similarity matrix wordfish_sims = extract_similarity_matrix(models["neurosynth"])Classification

Finally, here is an example of predicting neurosynth abtract labels using the pubmed neurosynth corpus. We first want to load the model and meta data for neurosynth, meaning labels for each text:

model = load_models(base_dir,"neurosynth")["neurosynth"] meta = get_meta(base_dir)["neurosynth"]We can then use the

featurize_to_corpusmethod to get labels and vectors from the model, and thebuild_svmfunction to build a simple, cross validated classified to predict the labels from the vectors:vectors,labels = featurize_to_corpus(model,meta) classifiers = build_svm(vectors=vectors,labels=labels,kernel="linear")The way this works is to take a new post from reddit with an unknown label, use the Word2vec word embeddings vector as a lookup, and generating a vector for the new post based on taking the mean vector of word embeddings. It’s a simple approach, could be improved upon, but it seemed to work reasonably well.

Classification of Disorder Using Reddit

A surprisingly untapped resource are Reddit boards, a forum with different “boards” indicating a place to write about topics of interest. It has largely gone unnoticed that individuals use Reddit to seek social support for advice, for example, the Depression board is predominantly filled with posts from individuals with Depression writing about their experiences, and the Computer Science board might be predominantly questions or interesting facts about computers or being a computer scientist. From the mindset of a research scientist who might be interested in Reddit as a source of language, a Reddit board can be thought of as a context. Individuals who post to the board, whether having an “official” status related to the board, are expressing language in context of the topic. Thus, it makes sense that we can “learn” a particular language context that is relevant to the board, and possibly use the understanding of this context to identify it in other text. Thus, I built 36 word embedding models across 36 Reddit boards, each representing the language context of the board, or specifically, the relationships between the words. I used these models to look at context of words across different boards. I also build one master “reddit” model, and used this model in the classification framework discussed previously.

For the classification framework, it was done for two applications - predicting reddit boards from reddit posts, and doing the same, but using the neurosynth corpus as the Word2Vec model (the idea being that papers about cognitive neuroscience and mental illness might produce word vectors that are more relevant for reddit boards about mental illness groups). For both of these, the high level idea is that we want to predict a board (grouping) based on a model built from all of reddit (or some other corpus). The corpus used to derive the word vectors gives us the context - meaning the relationships between terms (and this is done across all boards with no knowledge of classes or board types), and then we can take each entry and calculate an average vector for it based on averaging the vectors of word embeddings that are present in the sentence. Specifically we:

1) generate word embeddings model (M) for entire reddit corpus (resulting vocabulary is size N=8842)

2) For each reddit post (having a board label like "anxiety":

- generate a vector that is an average of word embeddings in M

Then for each pairwise board (for example, “anxiety” and “atheist”

1) subset the data to all posts for “anxiety” and “atheist”

2) randomly hold out 20% for testing, rest 80% for training

3) build an SVM to distinguish the two classes, for each of rbf, linear, and poly kernel

4) save accuracy metricsResults

How did we do?

Can we classify reddit posts?

The full result has accuracies that are mixed. What we see is that some boards can be well distinguished, and some not. When we extend to use the neurosytnh database to build the model, we don’t do as well, likely because the corpus is much smaller, and we remember from the paper that larger corpus tends to do better.

Can we classify neurosynth labels?

A neurosynth abstract comes with a set of labels for terms that are (big hand waving motions) “enriched.” Thus,given labels for a paragraph of text (corresponding to the neurosynth term) I should be able to build a classifier that can predict the term. The procedure is the same as above: an abstract is represented as its mean of all the word embeddings represented. The results are also pretty good for a first go, but I bet we could do batter with a multi-class model or approach.

Do different corpus provide different context (and thus term relationships?)

This portion of the analysis used the Word2Vec models generated for specific reddit boards. Before I delved into classification, I had just wanted to generate matrices that show relationships between words, based on a corpus of interest. I did this for NeuroSynth, as well as for a large sample (N=121862) reddit posts across 34 boards, including disorders and random words like “politics” and “science.” While there was interesting signal in the different relationship matrices, really the most interesting thing we might look at is how a term’s relationships varies on the context. Matter of fact, I would say context is extremely important to think about. For example, someone talking about “relationships” in the context of “anxiety” is different than someone talking about “relationships” in the context of “sex” (or not). I didn’t upload these all to github (I have over 3000, one for each neurosynth term), but it’s interesting to see how a particular term changes across contexts.

Each matrix (pdf file) in the folder above is one term from neurosynth. What the matrix for a single term shows is different contexts (rows) and the relationship to all other neurosynth terms (columns). Each cell value shows the word embedding of the global term (in the context specified) against the column term. The cool thing for most of these is that we see general global patterns, meaning that the context is similar, but then there are slight differences. I think this is hugely cool and interesting and could be used to derive behavioral phenotypes. If you would like to collaborate on something to do this, please feel free to send me an email, tweet, Harry Potter owl, etc.

Conclusions

Wordfish provides standard data structures and an easy way to extract terms, corpus, and perform a classification analysis, or extract similarity matrices for terms. It’s missing a strong application. We don’t have anything suitable in cognitive neuroscience, at least off the bat, and if you might have ideas, I’d love to chat. It’s very easy to write a plugin to extend the infrastructure to another terminology or corpus. We can write one of those silly paper things. Or just have fun, which is much better. The application to deploy Wordfish plugins, and the plugins themselves are open source, meaning that they can be collaboratively developed by users. That means you! Please contribute!.

Limitations

The limitations have to do with the fact that this is not a finished application. Much fine tuning could be done toward a specific goal, or to answer a specific question. I usually develop things with my own needs in mind, and add functionality as I go and it makes sense.

Database

For my application, it wasn’t a crazy idea to store each corpus entry as a text file, and I had only a few thousand terms. Thus, I was content using flat text files to store data structures. I had plans for integration of “real” databases, but the need never arose. This would not be ideal for much larger corpus, for which using a database would be optimal. Given the need for a larger corpus, I would add this functionality to the application, if needed.

Deployment Options

Right now the only option is to generate a folder and install on a cluster, and this is not ideal. Better would be options to deploy to a local or cloud-hosted virtual machine, or even a Docker image. This is another future option.

Data

It would eventually be desired to relate analyses to external data, such as brain imaging data. For example, NeuroVault is a database of whole-brain statistical maps with annotations for terms from the cognitive atlas, and we may want to bring in maps from NeuroVault at some point. Toward this aim a separate wordfish-data repo has been added. Nothing has been developed here yet, but it’s in the queue.

And this concludes my first un-paper paper. It took an afternoon to write, and it feels fantastic. Go fish!

· -

So Badly

I want it so badly, I can’t breathe. The last time I had this feeling was right after interviewing at Stanford. My heart ached for the realization that the opportunity to learn informatics was everything I had ever wanted, and the chances were so slim of getting it. Before I had any prowess in programming and didn’t know what a normal distribution was, I had the insight that immersion in the right environment would push and challenge me exactly in the way I was hungry for. It is only when we surround ourselves by individuals who are more skilled, and in an environment with opportunity to try new things and take risks, that we grow in skill and in ourselves. When I compare the kind of “things” that I tried to build back in my first quarter to what I build now, I have confidence that I was right about this particular instinct. Now, in my last year or so of graduate school, I am again faced with uncertainty about the future. I am almost thirty - I feel so old, and in this context a part of me is tired of constantly needing to prove that I am good enough. I am defined by what I like to call the goldfish property. I am so devoted to the things that I love, mainly learning about new infrastructures and creating beautiful applications, and my stubborness is so great that I will tend to grow toward the size of my tank. I have the confidence that, despite at any moment not having prowess in some domain, if presented with challenge, and especially in an environment where I can observe the bigger fish, I will grow. What scares me is the fact that in order to gain entry to the larger ocean, we are judged as little fish. I am also terrified by a general realization about the importance of the choice of an environment. Each step slightly away from being around the kind of people that are amazing at the things I want to be amazing at is a small dimming of the light insight my heart, and too many steps away means a finality of regret and a present life of doing some sets of tasks that, maybe one might be good at, but they do not satisfy the heart.

This is why this crucial next step is so terrifying. I have confidence in the things that I want to be amazing at. I’m also a weird little fish, I don’t care about pursuring weekend fun, going on trips, learning about different cultures, or even starting a family. I just want to immerse my brain in a computer and build things. The challenges that I want are building infrastructure, meaning databases, cluster and cloud environments, virtual machines… essentially applications. I go nuts over using version control (Github), APIs, Continuous Integration, and working on software projects. I like to think about data structures, and standards, and although my training in machine learning supplements that, and I enjoy learning new algorithms that I can use in my toolbox, I don’t have drive to find ways to better optimize those algorithms. I want to be the best version of myself that I can possibly be - to return home at the end of each day and feel that I’ve milked out every last ounce of energy and effort is the ultimate satisfaction. I want to be able to build anything that I can dream of. To have and perform some contained skillset is not good enough. I don’t think I’ll ever feel good enough, and although this can sometimes sound disheartening, is the ultimate driver towards taking risks to try new things that, when figured out, lead to empowerment and joy. The terrifying thing about remaining in a small research environment is that I won’t be pushed, or minimally have opportunity, to become a badass at these things. I might use them, but in context of neuroscientists or biologists, to already feel like one of the bigger fish in using these technologies fills me with immense sadness. I’m perhaps proficient in neuroscience, but I’m not great because I’m more interested in trying to build tools that neuroscientists can use. Any individual can notice this in him or herself. We tend to devote time and energy to the things that we obsessively love, and while the other things might be necessary to learn or know, it’s easy to distinguish these two buckets because one grows exponentially, effortlessly, and the other one changes only when necessary. Growing in this bucket is an essential need, critical for happiness and fulfillment, and to slow down this growth and find oneself in a job performing the same skill set with no opportunity for growth leads to screaming inside ones head, and feeling trapped.

So, in light of this uncertainty, and upcoming change, I feel squirmy. I want to be an academic software engineer, but if I am rooted in academia I am worried about being a big fish, and I would not grow. I am an engineer at heart, and this does not line up with the things that an individual in academia is hungry to learn, which are more rooted in biological problems than the building of things. However, if I were to throw myself into some kind of industry, I feel like I am breaking a bond and established relationship and trust with the people and problems that I am passionate about solving. I love academia because it gives me freedom to work on different and interesting problems every day, freedom and control over my time and work space, and freedom to work independently. But on the other hand, it is much more challenging to find people that are great at the kinds of modern technologies that I want to be great at. It is much less likely to be exposed to the modern, bleeding edge technology that I am so hungry to learn, because academia is always slightly behind, and the best I can do is read the internet, and watch conference videos for hours each evening, looking for tidbits of cool things that I can find an excuse to try in some research project. Academia is also painfully slow. There is a pretty low bar for being a graduate student. We just have to do one thesis project, and it seems to me that most graduate students drag their feet to watch cells grow in petri dishes, complain about being graduate students, and take their sweet time supplemented with weekend trips to vineyards and concerts in San Francisco. I’ve said this before, but if a graduate student only does a single thesis project, unless it is a cure for cancer, I think they have not worked very hard. I also think it’s unfortunate that someone who might have been more immersed not get chosen in favor of someone else that was a better interviewer. But I digress. The most interesting things in graduate school are the projects that one does for fun, or the projects that one helps his or her lab with, as little extra bits. But these things still feel slow at times, and the publication process is the ultimate manifestation of this turtleness. When I think about this infrastructure, and that the ultimate products that I am to produce are papers, this feels discouraging. Is that the best way to have an impact, for example, in reproducible science? It seems that the sheer existence of Github has done more for reproducibility across all domains than any published, academic efforts. In that light, perhaps the place to solve problems in academia is not in academia, but at a place like Github. I’m not sure.

This dual need for a different environment and want to solve problems in a particular domain makes it seem like I don’t have any options. The world is generally broken into distinct, “acceptable” and well paved paths for individuals. When one graduates, he or she applies for jobs. The job is either in academia or industry. It is well scoped and defined. Many others have probably done it before, and the steps of progression after entry are also logical. In academia you either move through postdoc-ship into professor-dome, or you become one of those things called a “Research Associate” which doesn’t seem to have an acceptable path, but is just to say “I want to stay in academia but there is no real proper position for what I want to do, so this is my only option.” What is one to do, other than to create a list of exactly the things to be desired in a position, and then figure out how to make it? The current standard options feel lacking, and choosing either established path would not be quite right. If I were to follow my hunger to learn things more rooted in a “building things” direction, this would be devastating in breaking trust and loyalty with people that I care a lot about. It also makes me nervous to enter a culture that I am not familiar with, namely the highly competitive world of interviewing for some kind of engineering position. The thought of being a little fish sits on my awareness and an overwhelming voice says “Vanessa, you aren’t good enough.” And then a tiny voice comes in and questions that thought, and says, “Nobody is good enough, and it doesn’t matter, because that is the ocean where you will thrive. You would build amazing things.” But none of that matters if you are not granted entry into the ocean, and this granting is no easy thing. It is an overwhelming, contradictory feeling. I want to sit in a role that doesn’t exist - this academic software developer, there is no avenue to have the opportunities and environment of someone that works at a place like Google but still work on problems like reproducible science. I don’t know how to deal with it at this point, and it is sitting on my heart heavily. I perhaps need to follow my own instinct and try to craft a position that does not exist, one that I know is right. I must think about these things.

· -

The Academic Software Developer

To say that I have very strong feelings about standards and technology used in academic research would be a gross understatement. Our current research practices and standards for publication, sharing of data and methods, and reproducible science are embarrassingly bad, and it’s our responsibility to do better. As a gradate student, it seemed that the “right place” to express these sentiments would be my thesis, and so I poured my heart out into some of the introduction and later chapters. It occurred to me that writing a thesis, like many standard practices in academia, is dated to be slow and ineffective - a document that serves only to stand as a marker in time to signify completion of some epic project, to only have eyes laid upon it by possibly 4-6 people, and probably not even that many, as I’ve heard stories of graduate students getting away with copy pasting large amounts of nonsense between an introduction and conclusion and getting away with it. So should I wait many months for this official pile of paper to be published to some Stanford server to be forgotten about before it even exists? No thanks. Let’s talk about this here and now.

This reproducibility crisis comes down to interpretation - the glass can be half full or half empty, but it doesn’t really matter because at the end of the day we just need to pour more water in the stupid glass, or ask why we are wasting out time evaluating and complaining about the level of water when we could be digging wells. The metric itself doesn’t even matter, because it casts a shadow of doubt not only on our discoveries, but on our integrity and capabilities of scientists. Here we are tooting on about “big data” and publishing incremental changes to methods when what we desperately is need paradigm shifts in the most basic, standard practices for conducting sound research. Some people might throw their hands up and say “It’s too big of a problem for me to contribute.” or “The process is too political and it’s unlikely that we can make any significant change.” I would suggest that change will come slowly by way of setting the standard through example. I would also say that our saving grace will come by way of leadership and new methods and infrastructure to synthesize data. Yes, our savior comes by way of example from software development and informatics.

Incentives for Publication

It also does not come as a surprise that the incentive structure for conducting science and publishing is a little broken. The standard practice is to aggressively pursue significant findings to publish, and if it’s not significant, then it’s not sexy, and you can file it away in the “forgotten drawer of shame.” In my short time as a graduate student, I have seen other graduate students, and even faculty anguish over the process of publication. I’ve seen graduate students want to get out as quickly as possible, willing to do just about anything “for that one paper.” The incentive structure renders otherwise rational people into publication-hungry wolves that might even want to turn garbage into published work by way of the science of bullshit. As a young graduate student it is stressful to encounter these situations and know that it goes against what you consider to be a sound practice of science. It is always best to listen to your gut about these things, and to pursue working with individuals that have the highest of standards. This is only one of the reasons that Poldrack Lab is so excellent. But I digress. Given that our incentives are in check, what about the publications themselves?

Even when a result makes it as far as a published paper, the representation of results as static page does not stand up to our current technological capabilities. Why is it that entire careers can be made out of parsing Pubmed to do different flavors of meta-analysis, and a large majority of results seem to be completely overlooked or eventually forgotten? Why is a result a static thing that does not get updated as our understanding of the world, and availability of data, changes? We pour our hearts out into these manuscripts, sometimes making claims that are larger than the result itself, in order to make the paper loftier than it actually is. While a manuscript should be presented with an interesting story to capture the attention of others who may not have interest in a topic, it still bothers me that many results can be over-sensationalized, and other important results, perhaps null or non significant findings, are not shared. Once the ink has dried on the page, the scientist is incentivized to focus on pursuit on the next impressive p-value. In this landscape, we don’t spend enough time thinking about reproducible science. What does it mean, computationally, to reproduce a result? Where do I go to get an overview of our current understanding for some question in a field without needing to read all published research since the dawn of time? It seems painfully obvious to me that continued confidence in our practice of research requires more standardization and best practices for methods and infrastructure that lead to such results. We need informed ways to compare a new claim to everything that came before it.

Lessons from Software Development and Informatics

Should this responsibility for a complete restructuring of practices, the albatross for the modern scientist, be his burden? Probably this is not fair. Informatics, a subset of science that focuses on the infrastructure and methodology of a scientific discipline, might come to his aid. I came into this field because I’m not driven by answering biological questions, but by building tools. I’ve had several high status individuals tell me at different times that someone like myself does not belong in a PhD program, and I will continue to highly disagree. There is a missing level across all universities, across all of academia, and it is called the Academic Software Developer. No one with such a skillset in their right mind would stay in academia when they could be paid two to three fold in industry. Luckily, some of us either don’t have a right mind, or are just incredibly stubborn about this calling that a monetary incentive structure is less important than the mission itself. We need tools to both empower researchers to assess the reproducibility of their work, and to derive new reproducible products. While I will not delve into some of the work I’ve done in my graduate career that is in line with this vision (let’s save that for thesis drivelings), I wlll discuss some important observations about the academic ecosystem, and make suggestions for current scientists to do better.

Reproducibility and Graduate Students

Reproducibility goes far beyond the creation of a single database to deposit results. Factors such as careful documentation of variables and methods, how the data were derived, and dissemination of results unify to embody a pattern of sound research practices that have previously not been emphasized. Any single step in an analysis pipeline that is not properly documented, or does not allow for a continued life cycle of a method or data, breaks reproducibility. If you are a graduate student, is this your problem? Yes it is your problem. Each researcher must think about the habits and standards that he or she partakes in from the initial generation of an idea through the publishing of a completed manuscript. On the one hand, I think that there is already a great burden on researchers to design sound experiments, conduct proper statistical tests, and derive reasonable inferences from those tests. Much of the disorganization and oversight to sound practices could be resolved with the advent of better tools such as resources for performing analysis, visualizing and capturing workflows, and assessing the reproducibility of a result. On the other hand, who is going to create these tools? The unspoken expectation is that “This is someone else’s problem.” Many seem to experience tunnel vision during graduate school. There is no reality other than the individual’s thesis, and as graduate students we are protected from the larger problems of the community. I would argue that the thesis is rather trivial, and if you spend most of your graduate career working on just one project, you did not give the experience justice. I don’t mean to say that the thesis is not important, because graduation does not happen without its successful completion. But rather, graduate school is the perfect time to throw yourself into learning, collaborating on projects, and taking risks. If you have time on the weekends to regularly socialize, go to vineyards, trips, and consistently do things that are not obsessively working on the topic(s) that you claimed to be passionate about when applying, this is unfortunate. If you aim to get a PhD toward the goal of settling into a comfy, high income job that may not even be related to your research, unless you accomplished amazing things during your time as a young researcher, this is also unfortunate. The opportunity cost of these things is that there is probably someone else in the world that would have better taken advantage of the amazing experience that is being a graduate student. The reason I bring this up is because we should be working harder to solve these problems. With this in mind, let’s talk about tiny things that we can do to improve how we conduct research.

The components of a reproducible analysis

A reproducible analysis, in its truest definition, must be easy to do again. This means several key components for the creation and life cycle of the data and methods:

- complete documentation of data derivation, analysis, and structure

- machine accessible methods and data resources

- automatic integration of data, methods, and standards

A truly reproducible analysis requires the collection, processing, documentation, standardization, and sound evaluation of a well-scoped hypothesis using large data and openly available methods. From an infrastructural standpoint this extends far beyond requiring expertise in a domain science and writing skills, calling for prowess in high performance computing, programming, database and data structure generation and management, and web development. Given initiatives like the Stanford Center for Reproducibile Neuroscience, we may not be too far off from “reproducibility as a service.” This does not change the fact that reproducibility starts on the level of the individual researcher.

Documentation