-

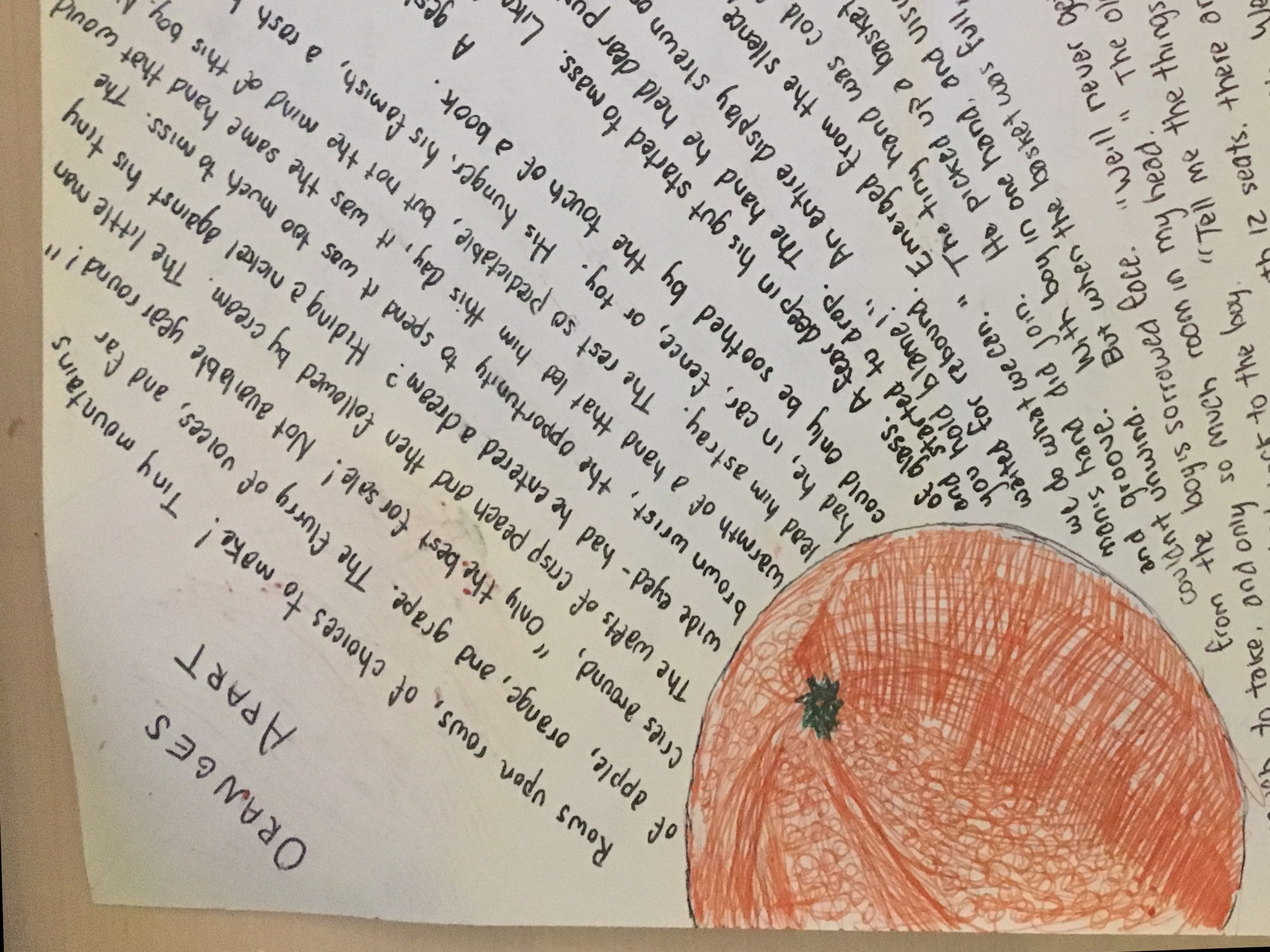

Oranges Apart

Rows upon rows, of choices to make! Tiny mountains of apple, orange and grape. The flurry of voices, and far cries around, “Only the best for sale! Not available year round!” The wafts of crisp peach, and then followed by cream. The little man was wide eyed - had he entered a dream? Hiding a nickel against his tiny brown wrist, the opportunity to spend it was too much to miss. The warmth of a hand that led him this day, it was the same hand that would lead him astray. The rest so predictable, but not the mind of this boy. No interest had he, in car, fence, or toy. His hunger, his famish, a rash burned in his look could only be soothed by the touch of a book.

A gesture toward fruit, and then a breaking of glass. A fear deep in his gut started to mass. Like full pots of curry rising to top, the tears bubbled and started to drop. The hand he held dear pushed him away. “You are too young boy, you put me to shame!” An entire display strewn onto ground. The life of the market held breath, and waited for sound.

Emerged from the silence, an old man stretched out his hand. “Why boy, do not mourn, we do what we can.” The tiny hand was cold from clenching the coin, and it is with this knob the old man’s hand did join. He picked up a basket, and started to move, picking up orange from hole, foot, and groove. With boy in one hand, and vision behind, there wasn’t a single doubt he couldn’t unwind. But when the basket was full, and it wasn’t enough space, the hope quickly withdrew from the boy’s sorrowed face.

“We’ll never get them all,” the boy forlongingly said. There is so much to take, and only so much room in my head. The old man looked down at the basket, curiosity in his eye. He looked back to the boy. “Tell me cause for this long, lonely sigh?”

“Our basket is sized according to rule. As with 12 seats, there are only 12 allowed in school. The ones we have chosen, are the ones we must take. There is no going back, we have made final stake. And yet you’ve chosen the ones that seem to be brown. They are freckled and different, they carry no crown. If I am to use my earnings on such, my father will be disappointed to lose so much.

The rush of his tears filled up the place, they covered the market, every corner and space. The amount to be known, was too much to take, but without such understanding, no scholar he’d make. The oranges were lost, reduced to the dirt by his feet, and the expectations of himself for the market he’d never meet. Amongst the smell of citrus, sun, and self doubt, his lips curled into a long final pout.

Now the old man, you see, also carried this pain. But he knew that personal condemnation had nothing to gain.

The fruit is prolific, he said with a smile. The joys that you might feel, they are equaled by sorrows. You might have one today, but it won’t be the same one tomorrow. You will never truly have one; from time you cannot borrow. But the experience of the flesh, the sweet juice run down your face. This joy belongs to you, it need not carry time or have place. Your evaluation of scope, you think you find rule. And perhaps this is how they teach you in school? But when one is great, must two then be better? What is one and two, but concepts of matter? The oranges you see, are not for the eye. Yet this is how you judge them, and by counting your pie. The colored bumps of brown, black and gold, indeed be sign that the fruit might be old. What you do not see, unless you take chance, is the way they tickle the tongue, the flavors that dance. It cannot be written in any scholarly pen. The nows take all preference, and never the when.

The boy understood when he looked down at his feet. His sampling of the space was never complete. But this was the beauty of life, not picking up all fruit to take. The cupfulls of unknown make for more interesting cake. The uncertainty hits hard, direct like a shot, and only this force can influence your plot. The fruit left behind carries no state. It is this truth left behind that you cannot partake.

He left from the market; he found what he sought. Unexpected by all, just one orange he bought. The burden of price was no longer useful to him, nor the incessant expectation to react to such whim. It was the best purchase he’d made, because it wasn’t driven by fear. It was his acceptance of blindness that would make him a seer. The journey for himself was his knowledge and heart. And he’d found the answer one orange apart.

for my dear friend

· -

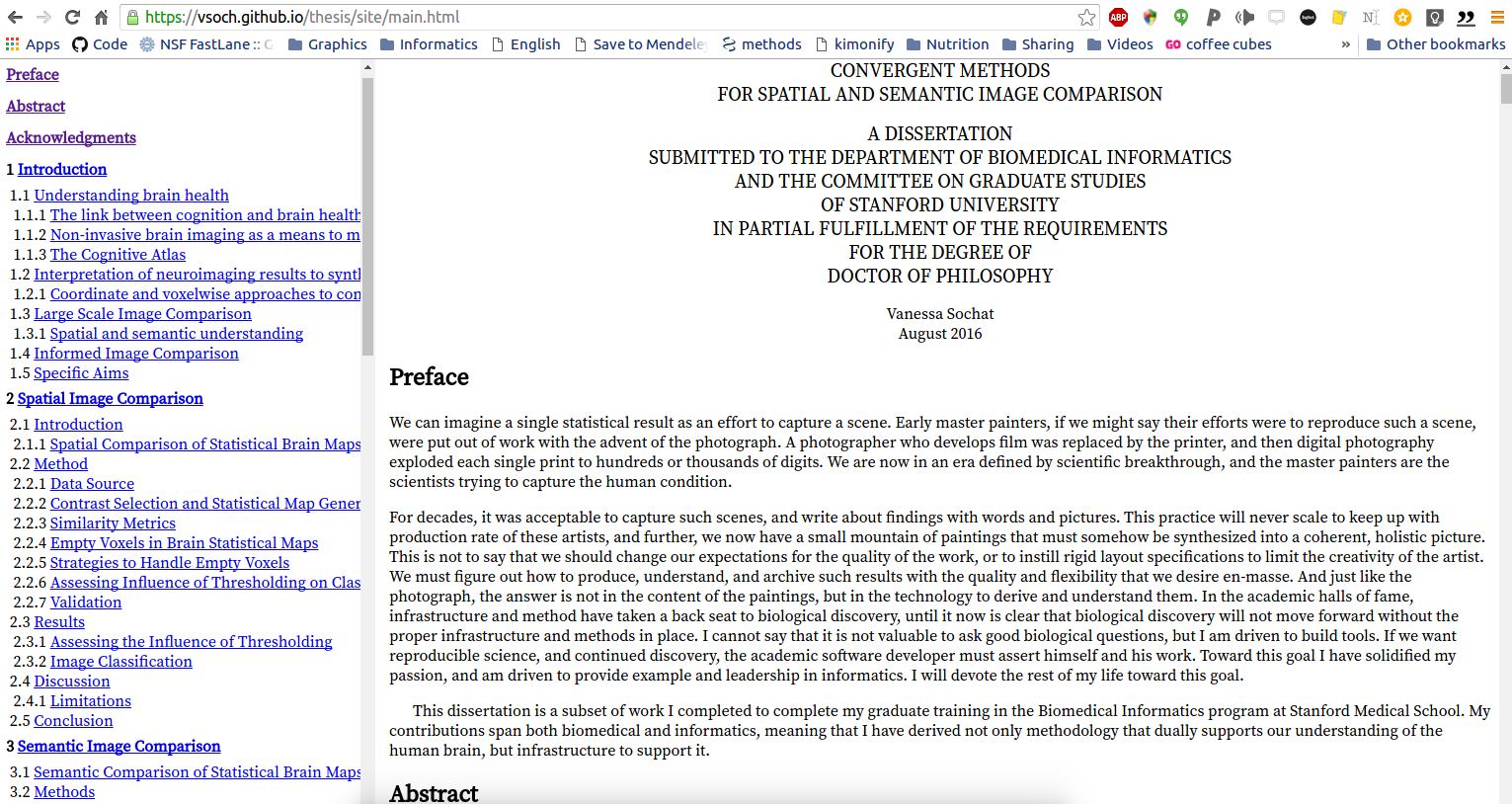

The Research Container Standard

Containers, on the level of the operating system, are like houses. We carry an expectation that we find food in the kitchen, a bed in a bedroom, and toiletries in a bathroom. We can imagine a fresh Ubuntu image is akin to a newly furnished house. When you shell in, most of your expectations are met. However, as soon as a human variable is thrown into the mix (we move in), the organization breaks. Despite our best efforts, the keys sometimes end up in the refrigerator. A sock becomes a lone prisoner under a couch cushion. The underlying organization of the original house is still there with the matching expectations, but we can no longer trust it. What do I mean? If I look at a house from the outside and someone asks me “Are the beds in the bedroom?” I would guess yes. However, sometimes I might be wrong, because we are looking at a Bay Area house that has three people residing in a living area.

Now imagine that there is a magical box, and into it I can throw any item, or ask for any item, and it is immediately retieved or placed appropriately. Everything in my house has a definitive location, and there are rules for new items to follow suit. I can, at any moment, generate a manifest of everything in the house, or answer questions about the things in the house. If someone asks me “Are the beds in the bedroom?” knowing that this house has this box, I can answer definitively “yes!”

The house is the container, and the box represents what a simple standard and software can do for us. In this post I want to discuss how our unit of understanding systems has changed in a way that does not make it easy for reproducibility and scalable modularity to co-exist in harmony.

Modular or Reproducibile?

For quite some time, our unit of understanding has been based on the operating system. It is the level of magnification at which we understand data, software, and products of those two things. Recently, however, two needs have arisen.

We simultaneously need modularity and reproducible practices. At first glance, these two things don’t seem very contradictory. A modular piece of software, given that all dependencies are packaged nicely, is very reproducible. The problem arises because it’s never the case that a single piece of software is nicely suited for a particular problem. A single problem, whether it be sequencing genetic code, predicting prostate cancer recurrence from highly dimensional data, or writing a bash script to play tetris, requires many disparate libraries and other software dependencies. Given our current level of understanding of information, the operating system, the best that we can do is give the user absolutely everything - a complete operating system with data, libraries, and software. But now for reproducibility we have lost modularity. A scientific software packaged in a container with one change to a version of a library yields a completely different container despite much of the content duplicated. We are being forced to operate on a level that no longer makes sense given the scale of the problem, and the dual need for modularity and dependency. How can we resolve this?

Level of Dimensionality to Operate

The key to answering this question is deciding on the level, or levels, of dimensionality that we will operate. On one side of the one extreme, we might break everything into the tiniest pieces imaginable. We could say bytes, but this would be like saying that an electron or proton is the ideal level to understand matter. While electrons and protons, and even one level up (atoms) might be an important feature of matter, arguably we can represent a lot more consistent information by moving up one additional level to a collection of atoms, an element. In file-system science an atom matches to a file, and an element to a logical grouping of files to form a complete software package or scientific analysis. Thus we decide to operate on the level of modular software packages and data. We call these software and data modules, and when put together with an operating system glue, we get a full containers. Under this framework we make the following assertions:

a container is the packaging of a set of software and data modules, reproducible in that all dependencies are included

building multiple containers is efficient because it allows for re-use of common modules

a file must not encompass a collection of compressed or combined files. I.e., the bytes content

each software and data module must carry, minimally, a unique name and install location in the systemThis means that the skeleton of a container (the base operating system) is the first decision point. This will filter down a particular set of rules for installation locations, and a particular subset of modules that are available. Arguably, we could even take organizational approaches that would work across hosts, and this would be especially relevant for data containers that are less dependent on host architecture. For now, let’s stick to considering them separately.

Operating System --> Organization Rules --> Library of Modules --> [choose subset] --> New ContainerUnder this framework, it would be possible to create an entire container by specifying an operating system, and then adding to it a set of data and software containers that are specific to the skeleton of choice. A container creation (bootstrap) that has any kind of overlap with regard to adding modules would not be allowed. The container itself is completely reproducible because it (still) has included all dependencies. It also carries complete metadata about its additions. The landscape of organizing containers also becomes a lot easier because each module is understood as a feature.

TLDR: we operate on the level of software and data modules, which logically come together to form reproducible containers.

Metric for Assessing Modules

Given that a software or data module carries one or more signatures, the next logical question is about the kinds of metrics that we want to use to classify any given module.

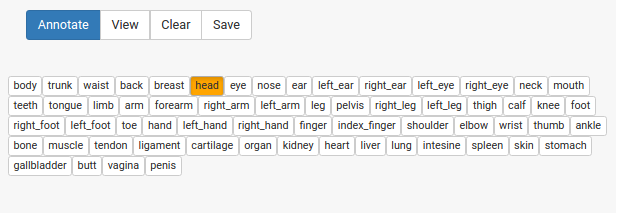

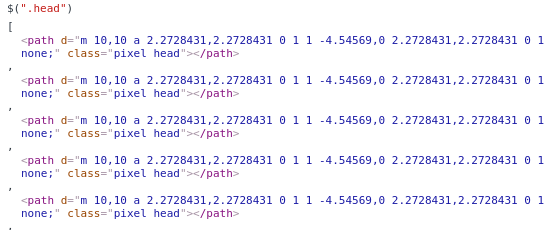

Manual Annotation

The obvious approach is the human labeled organization, meaning that a person looks at a software package, calls it “biopython” for “biology” in “python” and then moves on. Or perhaps it is done automatically based on the scientists domain of work, tags from somewhere, or a journal published in. This metric works well for small, manageable projects, but is largely unreliable as it is hard to scale or maintain.

Functional Organization

The second is functional organization. We can view software as a black box that performs some task, and rank/sort the software based on comparison of that performance. If two different version of a python module act exactly the same, despite subtle differences in the files (imagine the silliest case where the spacing is slightly different) they are still deemed the same thing. If we define a functional metric likes “calculates standard deviation” and then test software across languages to do this, we can organize based on the degree to which each individual package varies from the average. This metric maps nicely to scientific disciplines (for which the goal is to produce some knowledge about the world. However if this metric is used, the challenge would be for different domains to be able to robustly identify the metrics most relevant, and then derive methods for measuring these metrics across new software. This again is a manual bottleneck that would be hard to overtake. Even if completely programmatic, data driven approaches existed for deriving features of these black boxes, without the labels to make those features into a supervised classification task, we don’t get very far.

File Organization and Content

A third idea is a metric not based on function or output, but simple organizational rules. We tell the developer that we don’t care what the software package does, or how it works, but we assert that it must carry a unique identifier, and that identifier is mapped to a distinct location on a file system. With these rules, it could be determined immediately if the software exists on a computer, because it would be found. It would be seamless to install and use, because it would not overwrite or conflict with other software. It would also allow for different kinds of (modular) storage of data and software containers.

For the purposes of this thinking, I propose that the most needed and useful schema is functional, but in order to get there we must start with what we already have: files and some metadata about them. I propose the following:

Step 1 is to derive best practices for organization, so minimally, given a particular OS, a set of software and data modules have an expected location, and some other metadata (package names, content hashes, dates, etc.) about them.

Step 2, given a robust organization, is to start comparing across containers. This is where we can do (unsupervised) clustering of containers based on their modules.

Step 3, given an unsupervised clustering, is to start adding functional and domain labels. A lot of information will likely emerge with the data, and this is the step I don’t have vision for beyond that. Regardless of the scientific questions (which others vary in having interest in) they are completely reliant on having a robust infrastructure to support answering them.The organization (discussed more below) is very important because it should be extendable to as many operating system hosts as possible, and already fit into (what exist/are) current cluster file-systems. We should take an approach that affords operating systems designing themselves. E.g., imagine someday that we can do the following:

We have a functional goal. I want an operating system (container) optimized to do X. I can determine if X is done successfully, and to what degree.

We start with a base or seed state, and provide our optimization algorithm with an entire suite of possible data and software packages to install.

We then let machine learning do it’s thing to figure out the optimized operating system (container) given the goal.Since the biggest pain in creating containers (seems to be) the compiling and “getting stuff to work” part, if we can figure out an automated way to do this, one that affords versioning, modularity, and transparency, we are going to be moving in the right direction. It would mean that a scientist could just select the software and data he/she wants from a list, and a container would be built. That container would be easily comparable, down the difference in software module verison, to another container. With a functional metric of goodness, the choices of data and software could be somewhat linked to the experimential result. We would finally be able to answer questions like “Which version of biopython produces the most varying result? Which brain registration algorithm is most consistent? Is the host operating system important?

If we assume that these are important questions to be able to answer, and that this is a reasonable approach to take, then perhaps we should start by talking about file system organization.

File Organization

File organization is likely to vary a bit based on the host OS. For example, busybox has something like 441 “files” and most of them are symbolic links. Arguably, we might be able to develop an organizational schema that remains (somewhat) true to the Filesystem Hierarchy Standard, but is extendable to operating systems of many types. I’m not sure how I feel about this standard given that someday we will likely have operating systems designing themselves, but that is a topic for another day.

Do Not Touch

I would argue that the following folders, most scientific software should not touch:

/boot: boot loader, kernel files/bin: system-wide command binaries (essential for OS)/etc: host-wide configuration files/lib: again, system level libraries/root: root’s home. Unless you are using Docker, putting things here leads to trouble./sbin: system specific binaries/sys: system, devices, kernel features

Variable and Working Locations

/run: run time variables, should only be used for that, during running of programs./tmp: temporary location for users and programs to dump things./home: can be considered the user’s working space. Singularity mounts by default, so nothing would be valued there. The same is true for..

Connections

Arguably, connections for containers are devices and mount points. So the following should be saved for that:

/dev: essential devices/mnt: temporary mounts./srv: is for “site specific data” served by the system. Perhaps this is the logical mount for cluster resources?

The point that “connections” also means mounting of data has not escaped my attention. This is an entire section of discussion.

Data

This is arguably just a mount point, but I think there is one mount root folder that is perfectly suited for data:

/media: removable media. This is technically something like a CD-ROM or USB, and since these media are rare to use, or used to mount drives with data, perhaps we can use it for exactly that.Data mounted for a specific application should have the same identifier (top folder) to make the association. The organization of the data under that location is up to the application. The data can be included in the container, or mounted at runtime, and this is under the decision of the application. Akin to software modules, overlap in modules is not allowed. For example, let’s say we have an application called bids (the bids-apps for neuroimaging):

the bids data would be mounted / saved at

/media/bids.

importing of distinct data (subjects) under that folder would be allowed, eg/media/bids/sub1and/media/bids/sub2. importing of distinct data (within subject) would also be allowed, e.g.,/media/bids/sub1/T1and/media/bids/sub1/T2.

importing of things that get overwritten would not be allowed.An application’s data would be traceable to the application by way of it’s identifier. Thus, if I find

/media/bidsI would expect to find either/opt/bidsor equivalent structure under/usr/local(discussed next).Research Software

Research software is the most valuable element, along with data, and there are two approaches we can take, and perhaps define criteria for when to use each. There must be general rules for packaging, naming, and organizing groups of files. The methods must be both humanly interpretable, and machine parsable. For the examples below, I will reference two software packages, singularity and sregistry:

Approach 1: /usr/local

For this approach, we “break” packages into shared sub-folders, stored under

/usr/local, meaning that executables are in a shared bin:/usr/local/ /bin/ singularity sregistryand each software has it’s own folder according to the Linux file-system standard (based on its identifier) under

/usr/local/[ name ]. For example, for lib:/usr/local/lib singularity/ sregistry/The benefits of this approach are that we can programatically identify software based on parsing

/usr/local, and just need one/binon the path. We also enforce uniqueness of names, and have less potential for having software with the same name in different bins (and making the determinant of which to use based on the$PATH). The drawbacks are that we have a harder time removing something from the path, if that is needed, and it will not always be the case that all programs need all directories. If we are parsing a system to discover software, for example, we would need to be very careful not to miss something. This is rationale for the next approach.Approach 2: /opt

This is a modular approach that doesn’t share the second level of directories. The

/optbin is more suited to what we might call a modern “app”. For this approach, each installed software would have it’s own sub-folder. In the example of using singularity and sregistry:/opt/ singularity/ sregistry/and then under each, the software could take whatever approach is necessary (in terms of organization) to make it work. This could look a lot like small clones of the Linux file system standard, eg:

/opt/ singularity/ /bin /lib /etc /libexecor entirely different

/opt/ singularity/ /modules /functions /contribThe only requirement is that we would need a way / standard to make the software accessible on the path. For this we could do one of the following:

require a

bin/folder with executables. The sub-folders in/optwould be parsed for/binand the bin folders added to the path. This would work nicely with current software distributed, which tends to have builds dumped into this kind of hierarchy.

In the case that the above is not desired because not all applications conform to having a bin, then the application would need to expose some environment variable / things to add to the$PATHto get it working.Approach 3: /opt with features of /usr/local

If the main problem with

/optis having to find/add multiple things to the path, there could be a quasi solution that places (or links) main executables in a main/binunder/opt. Thus, you can add one place to the path, and have fine control over the programs on the path by way of simply adding/removing a link. This also means that the addition of a software module to a container needs to understand what should be linked.Submodules

We are operating on the level of the software (eg, python, bids-app, or other). What about modules that are installed to software? For example, pip is a package manager that installs to python. Two equivalent python installations with different submodules are, by definition, different. We could take one of the following approaches:

represent each element (the top level software, eg python) as a base, and all submodules (eg, things installed with pip) are considered additions. Thus, if I have two installations of python with different submodules, I should still be able to identify the common base, and then calculate variance from that base based on differences between the two.

Represent each software version as a base, and then, for each distinct (common) software, identify a level of reproducibility. Comparison of bases would look at the core base files, while comparison of modules would look across modules and versions, and comparison within a single module would look across all files in the module.The goal would be to be able to do the following:

quickly sniff the software modules folder to find the bases. The bases likely have versions, and the version should ideally be reflected in the folder name. If not, we can have fallback approaches to finding it, and worse case, we don’t. Minimally, this gives us a sense of the high level guts of an image.

If we are interested in submodules, we then do the same operation, but just a level deeper within the site-packages of the base.

if we are interested in one submodule, then we need to do the same comparison, but across different versions of a package.Metadata

As stated above, a software or data module should have a minimal amount of metadata:

unique identifier, that includes the version

a content hash of it’s guts (without a timestamp)

(optionally, if relevant) a package manager

(optionally, if relevant) where the package manager installs toPermissions

Permissions are something that seem to be very important, and likely there are good and bad practices that I could image. Let’s say that we have a user, on his or her local machine. He or she has installed a software module. What are the permissions for it?

- Option 1 is to say “they can be root for everything, so just be conservative and require it.” A user on the machine that is not sudo, too bad. This is sort of akin to maintaining and all or nothing binary permission, but for one person, that might be fine. Having this one (more strict) level, as long as it’s maintained, wouldn’t lead to confusion between user and root space, because only operation in root space is allowed.

- Option 2 is to say “it’s just their computer, we don’t need to be so strict, just change permissions to be world read/write/execute.” This doesn’t work, of course, for a shared resource where someone could do something malicious by editing files.

- Option 3 is to say “we should require root for some things, but then give permissions just to that user” and then of course you might get a weird bug if you switch between root/user, sort of like Singularity has some times with the cache directory. Files are cached under

/rootwhen a bootstrap is run as root, but under the user’s home when import is done in user space.

I wish that we lived in a compute world where each user could have total control over a resource, and empowered to break and change things with little consequences. But we don’t. So likely we would advocate for a model that supports that - needing root to build and then install, and then making it executable for the user.

Overview

A simple approach like this:

fits in fairly well with current software organization

is both modular for data and software, but still creates reproducible containers

allows for programmatic parsing to be able to easily find software and capture the contents of a container.We could then have a more organized base to work from, along with clearer directions (even templates) for researchers to follow to create software. In the context of Singularity containers, these data and software packages become their own thing, sort of like Docker layers (they would have a hash, for example) but they wouldn’t be random collections of things that users happened to put on the same line in a Dockerfile. They would be humanly understood, logically grouped packages. Given some host for these packages (or a user’s own cache that contains them) we can designate some uri (let’s call it

data://that will check the user’s cache first, and then the various hosted repositories for these objects. A user could addanaconda3for a specific version to their container (whether the data is cached or pulled) like:import data://anaconda3:latestAnd a user could just as easily, during build time, export a particular software or data module for his or her use:

export data://mysoftware:v1.0and since the locations of mysoftware for the version would be understood given the research container standard, it would be found and packaged, put in the user’s cache (and later optionally / hopefully shared for others).

This would also be possible not just from/during a bootstrap, but from a finished container:

singularity export container.img data://anaconda3:latestI would even go as far to say that we stay away from system provided default packages and software, and take preference for ones that are more modular (fitting with our organizational schema) and come with the best quality package managers. That way, we don’t have to worry about things like “What is the default version of Python on Ubuntu 14.04? Ubuntu 14.06? Instead of a system python, I would use anaconda, miniconda, etc.

Challenges

Challenges of course come down to:

symbolic links of libraries, and perhaps we would need to have an approach that adds things one at a time, and deals with potential conflicts in files being updated.

reverse “unpacking” of a container. Arguably, if it’s modular enough, I should be able to export an entire package from a container.

configuration: we would want configuration to occur after the addition of a new piece, calling ldconfig, and then add the next, or things like that.

the main problem is library dependencies. How do we integrate package managers and still maintain the hierarchy?One very positive thing I see is that, at least for Research Software, a large chunk of it tends to be open source, and found freely available on Github or similar. This means that if we do something simple like bring in an avenue to import from a Github uri, we immediately include all of these already existing packages.

First Steps

I think we have to first look at the pieces we are dealing with. It’s safe to start with a single host operating system, Ubuntu is good, and then look at the following:

what changes when I use the package manager (apt-get) for different kinds of software, the same software with different versions

how are configurations and symbolic links handled? Should we skip package managers and rely on source? (probably not)

how would software be updated under our schematic?

where would the different metadata/ metrics be hosted?to one that does not

Organization and simple standards that make things predictible (while still allowing for flexibility within an application) is a powerful framework for reproducible software, and science. Given a standard, we can build tools around it that give means to test, compare, and make our containers more trusted. We never have to worry about capturing our changes to decorating the new house, because we decorate with a tool that captures them for us.

I think it’s been hard for research scientists to produce software because they are given a house, and told to perform some task in it, but no guidance beyond that. They lose their spare change in couches, don’t remember how they hung their pictures on the wall, and then get in trouble when someone wants to decorate a different house in the same way. There are plenty of guides for how to create an R or Python module in isolation, or in a notebook, but there are minimal examples outlined or tools provided to show how a software module should integrate into it’s home.

I also think that following the traditional approach of trying to assemble a group, come to some agreement, publish a paper, and wait for it to be published, is annoying and slow. Can open source work better? If we work together on a simple goal for this standard, and then start building examples and tools around it, we can possibly (more quickly) tackle a few problems at once.

1.the organizational / curation issue with containers

2.the ability to have more modularity while still preserving reproducibility, and

3.a base of data and software containers that can be selected to put into a container with clicks in a graphical user interface.Now if only houses could work in the same way! What do you think?

· -

The Counting Man

She pulled the papery dragon insect from her nose. It’s hyper-compressed form exploded instantly into an airy, delicate pattern of scales and space, and it flew around the room before perching on top of the bookshelf. She had been a sick child, and until she figured out that these dragons grew in her nose, life had been confusing and hard. It made it harder that no one, not even her parents, believed in the existence of her dragons, and she had no way to show them. They would emerge in her solitary living space, and fly out the window to hide in the trees to live a shady, verdant life. Did they know something that she did not? It was back in that window when she spent a lot of time wandering around the city. In the reflections of her face in the shop windows she was reminded of being in the present. But her mind mirrored the setting sun and the reflections on the puddles of the water that had not yet come to be. This was her present. She was constantly thinking of her future, and ruminating about her past.

On a cold December morning, she skipped her flying lessons to pay a visit to the old man that counted things. He lived three paces left of the best smelling bakery in the city, and just underneath one’s nose when the smell of strawberry and dough reached a nutty sweetness that indicates done-ness. If you counted one half a step too far, you would surely miss him. If the pastries burnt, you had already gone too far.

Nobody knew where he came from, how he persisted, or even how old he was. When the city governor miscalculated the earnings to expenditures of the city, a visit to the old man cleared the digital slate. When a distracted goose misplaced her goslings, the old man was just a waddle away to account for them properly. The girl imagined that he sustained on the gears turning in his head, the infinite space of numbers that gave him beauty and meaning, and the leftover croissants from the bakery.

On that afternoon, even the drones were not flying, and the girl was stuck in a while loop unable to let go of her conditions of the past. Thus, her desire to visit the counting man wasn’t to actually perform addition and subtraction of objects, but because she might count on having his company during this time of loneliness. His space was in perfect logical order, and in parallel, in complete unaccounted-for chaos. Today he had arranged a surface of glass shapes, and had removed the ground under them so you might fall to smithereens if you slipped into a margin of error. A wave of chill crashed over the small of her lower back. it was a situation of danger. But when you have a mindset like the girl, a risk that might topple some internal barrier presents itself only as exciting opportunity. And so she stepped forward onto the surface.

Her moves were cautious at first, pressing gently on the glossy shapes to estimate how well they liked her. But soon, she felt her heart relax, her mind release into the rhythm, and she gave in to allowing the stones to capture the memory of her feet. She did this until exhaustion, and then stepped off of the glass back onto the crisp, well-defined apple earth. Her eyes moved from her exposed toes to the feet, knees, and finally face, of the old counting man.

“Can you tell me how many?” she asked. Although she had lost track of time, she was confident that she had done it so quickly - and imagined her dance as a well-scoped problem to tackle to ensure that her strategy was robust. She had hit the smaller states of the glass continent by brushing her feet over them in a horizontal motion, and having reassurance of her influence when they glowed and smiled.

The old man also smiled. “You danced over great depths, and gave the glass much memory today. Perhaps you should come back tomorrow, and I might count then.”

This practice continued, day after day, and the girl learned to dance genuinely. The shapes would dance with her sometimes too, and change their locations, as shapes often like to do. She approached the same task with a clean pair of feet each day, and a new trick in her mind for how to make sure she covered all the space. It wasn’t until the following December that the old man added note to the end of their daily routine.

“You know,” added the old man. Today you’ve visited me 365 times. I think to make the calculation easier, I’ll call that “1.” Maybe we should do this again sometime?”

As the girl opened her lungs to release enough air to respond with “Yes, we should!” for the first time in the presence of another, a tiny dragon emerged from her nose. It’s tiny body, painted with red and gold, flew to perch on the old counting man’s right ear. A beat of uncertainty punched her in the stomach, and she was both surprised and terrified of exposing her deepest vulnerability. “Such a small spirit,” he responded calmly. “but within it is accrued such vibrancy and hope.” It was then that the girl again found control over the air to expel the message curled in her neck.

“Yes, I’ve never seen one like that before. And I of course will continue to visit. But I do not wish to count, because I will be dancing.”

Neither needed to say more. The old man stretched out his pinky, an offering to the tiny dragon to sit on, and he streamed the dragon from his ear toward a tree where he might enjoy watching the leaves grow. Both he and she knew that it wasn’t about the counting at all. The girl first went to visit the old man in hopes that it might alleviate her loneliness, a hidden desire behind searching for the secrets of the bakery. She visited him again because the dancing gave her a parcel of meaning. She continued to visit because she had found herself in the movements of her feet.

“My dear friend,” the girl said to the counting man one day. “The sun rises and falls, and someone, somewhere, is apologizing to their selves of the past, feeling loss for the dances not done, and destroying the present with rumination about a future that is never truly reached.” She paused, anticipating some sign that he knew that this insight could only come from personal experience. “Is this an optimal way to live one’s life?”

“It might be, for some,” he responded. “But for you, you just keep dancing.”

It is only when the girl stopped counting did she realize that she could count on the things that gave her meaning, and old man time would manage the rest. And little did she know, he had come to count on her too.

· -

Containers for Academic Products

Why do we do research? Arguably, the products of academia are discoveries and ideas that further the human condition. We want to learn things about the world to satisfy human curiosity and need for innovation, but we also want to learn how to be happier, healthier, and generally maximize the goodness of life experience. For this reason, we give a parcel of our world’s resources to people with the sole purpose of producing research in a particular domain. We might call this work the academic product.

The Lifecycle of the Academic Product

Academic products typically turn into manuscripts. The manuscripts are published in journals, ideally the article has a few nice figures, and once in a while the author takes it upon him or herself to include a web portal with additional tools, or a code repository to reproduce the work. For most academic products, they aren’t so great, and they get a few reads and then join the pile of syntactical garbage that is a large portion of the internet. For another subset, however, the work is important. If it’s important and impressive, people might take notice. If it’s important but not so impressive, there is the terrible reality that these products go to the same infinite dumpster, but they don’t belong there. This is definitely an inefficiency, and let’s step back a second here and think about how we can do better. First, let’s break down these academic product things, and start with some basic questions:

- What is the core of an academic product?

- What does the ideal academic product look like?

- Why aren’t we achieving that?

What is the core of an academic product?

This is going to vary a bit, but for most of the work I’ve encountered, there is some substantial analysis that leads to a bunch of data files that should be synthesized. For example, you may run different processing steps for images on a cluster, or permutations of a statistical test, and then output some compiled thing to do drumroll your final test. Or maybe your final thing isn’t a test, but a model that you have shown can work with new data. And then you share that result. It probably can be simplified to this:

[A|data] --> [B|preprocessing and analysis] --> [C|model or result] --> [D|synthesize/visualize] --> [E|distribute]We are moving

Adata (the behavior we have measured, the metrics we have collected, the images we have taken) throughBpreprocessing and analysis (some tube to handle noise, use statistical techniques to say intelligent things about it) to generateC(results or a model) that we must intelligently synthesize, meaning visualization or explanation (D) by way of story (ahem, manuscript) and this leads toE, some new knowledge that improves the human condition. This is the core of an academic product.What does the ideal academic product look like?

In an ideal world, the above would be a freely flowing pipe. New data would enter the pipe that matches some criteria, and flow through preprocessing, analysis, a result, and then an updated understanding of our world. In the same way that we subscribe to social media feeds, academics and people alike could subscribe to hypothesis, and get an update when the status of the world changes. Now we move into idealistic thought that this (someday) could be a reality if we improve the way that we do science. The ideal academic product is a living thing. The scientist is both the technician and artist to come up with this pipeline, make a definition of what the input data looks like, and then provide it to the world.

The entirety of this pipeline can be modular, meaning running in containers that include all of the software and files necessary for the component of the analysis. For example, steps

A(data) andB(preprocessing and analysis) are likely to happen in a high performance computing (HPC) environment, and you would want your data and analysis containers run at scale there. There is a lot of discussion going on about using local versus “cloud” resources, and I’ll just say that it doesn’t matter. Whether we are using a local cluster (e.g., SLURM) or in Google Cloud, these containers can run in both. Other scientists can also use these containers to reproduce your steps. I’ll point you to Singularity and follow us along at researchapps for a growing example of using containers for scientific compute, along with other things.For the scope of this post, we are going to be talking about how to use containers for the middle to tail end of this pipeline. We’ve completed the part that needs to be run at scale, and now we have a model that we want to perhaps publish in a paper, and provide for others to run on their computer.

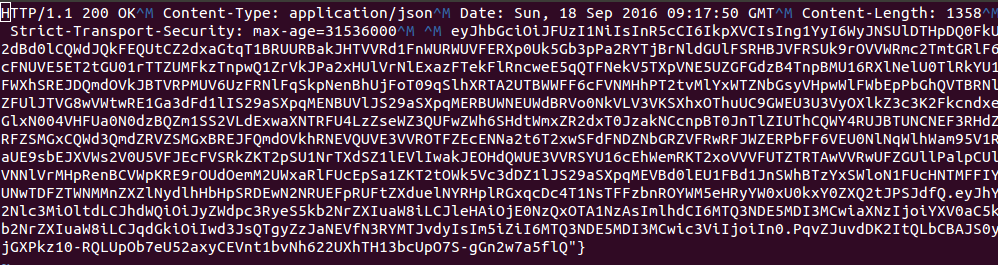

Web Servers in Singularity Containers

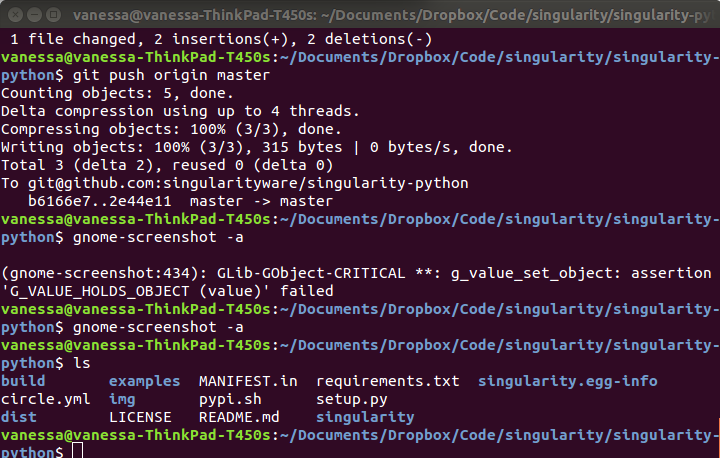

Given that we can readily digest things that are interactive or visual, and given that containers are well suited for including much more than a web server (e.g., software dependencies like Python or R to run an analysis or model that generates a web-based result) I realized that sometimes my beloved Github pages or a static web server aren’t enough for reproducibility. So this morning I had a rather silly idea. Why not run a webserver from inside of a Singularity container? Given the seamless nature of these things, it should work. It did work. I’ve started up a little repository https://github.com/vsoch/singularity-web of examples to get you started, just what I had time to do this afternoon. I’ll also go through the basics here.

How does it work?

The only pre-requisite is that you should install Singularity. Singularity is already available on just over 40 supercomputer centers all over the place. How is this working? We basically follow these steps:

- create a container

- add files and software to it

- tell it what to run

In our example here, at the end of the analysis pipeline we are interested in containing things that produce a web-based output. You could equally imagine using a container to run and produce something for a step before that. You could go old school and do this on a command by command basis, but I (personally) find it easiest to create a little build file to preserve my work, and this is why I’ve pushed this development for Singularity, and specifically for it to look a little bit “Dockery,” because that’s what people are used to. I’m also a big fan of bootstrapping Docker images, since there are ample around. If you want to bootstrap something else, please look at our folder of examples.

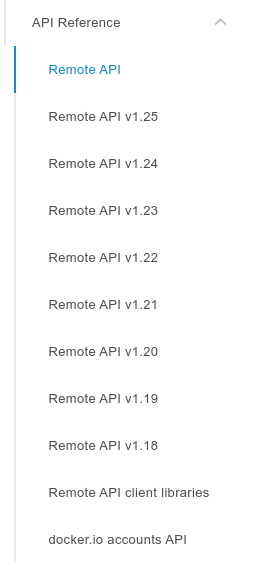

The Singularity Build file

The containers are built from a specification file called

Singularity, which is just a stupid text file with different sections that you can throw around your computer. It has two parts: a header, and then sections (%runscript,%post). Actually there are a few more, mostly for more advanced usage that I don’t need here. Generally, it looks something like this:Bootstrap: docker From: ubuntu:16.04 %runscript exec /usr/bin/python "$@" %post apt-get update apt-get -y install pythonLet’s talk about what the above means.

The Header

The First line

bootstrapsays that we are going to bootstrap adockerimage, specifically using the (Fromfield)ubuntu:16.04. What the heck is bootstrapping? It means that I’m too lazy to start from scratch, so I’m going to start with something else as a template. Ubuntu is an operating system, instead of starting with nothing, we are going to dump that into the container and then add stuff to it. You could choose another distribution that you like, I just happen to like Debian.%post

Post is the section where you put commands you want to run once to create your image. This includes:

- installation of software

- creation of files or folders

- moving data, files into the container image

- analysis things

The list is pretty obvious, but what about the last one, analysis things? Yes, let’s say that we had a script thing that we wanted to run just once to produce a result that would live in the container. In this case, we would have that thing run in

%post, and then give some interactive access to the result via the%runscript. In the case that you want your image to be more like a function and run the analysis (for example, if you want your container to take input arguments, run something, and deliver a result), then this command should go in the%runscript.%runscript

The

%runscriptis the thing executed when we run our container. For this example, we are having the container execute python, with whatever input arguments the user has provided (that’s what the weird$@means). And note that the commandexecbasically hands the current running process to this python call.But you said WEB servers in containers

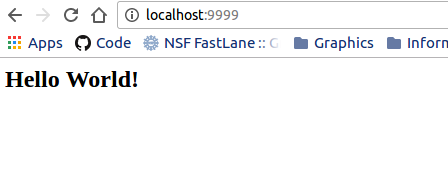

Ah, yes! Let’s look at what a

Singularityfile would look like that runs a webserver, here is the first one I put together this afternoon:Bootstrap: docker From: ubuntu:16.04 %runscript cd /data exec python3 -m http.server 9999 %post mkdir /data echo "<h2>Hello World!</h2>" >> /data/index.html apt-get update apt-get -y install python3It’s very similar, except instead of exposing python, we are using python to run a local webserver, for whatever is in the

/datafolder inside of the container. For full details, see the nginx-basic example. We change directories to data, and then use python to start up a little server on port 9999 to serve that folder. Anything in that folder will then be available to our local machine on port 9999, meaning the addresslocalhost:9999or127.0.0.1:9999.Examples

nginx-basic

The nginx-basic example will walk you through what we just talked about, creating a container that serves static files, either within the container (files generated at time of build and served) or outside the container (files in a folder bound to the container at run time). What is crazy cool about this example is that I can serve files from inside of the container, perhaps produced at container generation or runtime (in this example, my container image is called

nginx-basic.img, and by default it’s going to show me theindex.htmlthat I produced with theechocommand in the%postsection:./nginx-basic.img Serving HTTP on 0.0.0.0 port 9999 ...

or I can bind a folder on my local computer with static web files (the

.refers to the present working directory, and-Bor--bindare the Singularity bind parameters) to my container and serve them the same!singularity run -B .:/data nginx-basic.imgThe general format is either:

singularity [run/shell] -B <src>:<dest> nginx-basic.img singularity [run/shell] --bind <src>:<dest> nginx-basic.imgwhere

<src>refers to the local directory, and<dest>is inside the container.nginx-expfactory

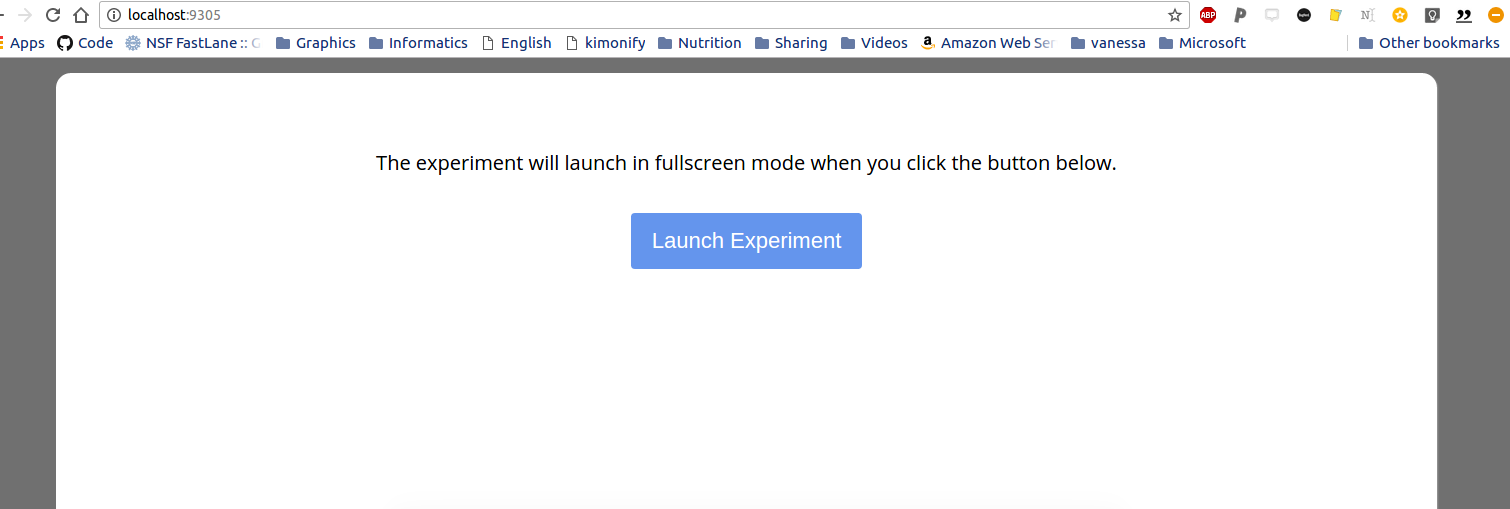

The nginx-expfactory example takes a software that I published in graduate school and shows an example of how to wrap a bunch of dependencies in a container, and then allow the user to use it like a function with input arguments. This is a super common use case for science publication type things - you want to let someone run a model / analysis with custom inputs (whether data or parameters), meaning that the container needs to accept input arguments and optionally run / download / do something before presenting the result. This example shows how to build a container to serve the Experiment Factory software, and let the user execute the container to run a web-based experiment:

./expfactory stroop No battery folder specified. Will pull latest battery from expfactory-battery repo No experiments, games, or surveys folder specified. Will pull latest from expfactory-experiments repo Generating custom battery selecting from experiments for maximum of 99999 minutes, please wait... Found 57 valid experiments Found 9 valid games Preview experiment at localhost:9684

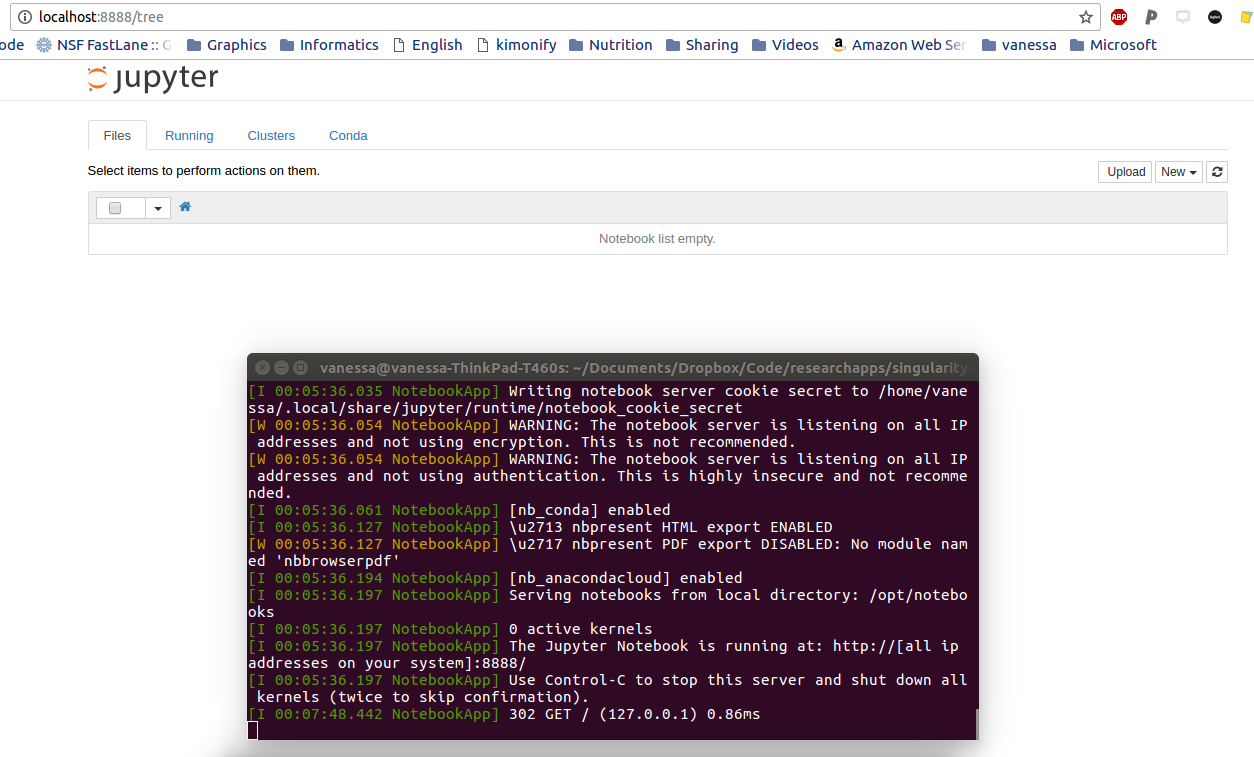

nginx-jupyter

Finally, nginx-jupyter fits nicely with the daily life of most academics and scientists that like to use Jupyter Notebooks. This example will show you how to put the entire Jupyter stuffs and python in a container, and then run it to start an interactive notebook in your browser:

The ridiculously cool thing in this example is that when you shut down the notebook, the notebook files are saved inside the container. If you want to share it? Just send over the entire thing! The other cool thing? If we run it this way:

sudo singularity run --writable jupyter.imgThen the notebooks are stored in the container at

/opt/notebooks(or a location of your choice, if you edit theSingularityfile). For example, here we are shelling into the container after shutting it down, and peeking. Are they there?singularity shell jupyter.img Singularity: Invoking an interactive shell within container... Singularity.jupyter.img> ls /opt/notebooks Untitled.ipynbYes! And if we run it this way:

sudo singularity run -B $PWD:/opt/notebooks --writable jupyter.imgWe get the same interactive notebook, but the files are plopping down into our present working directory

$PWD, which you now have learned is mapped to/opt/notebooksvia the bind command.How do I share them?

Speaking of sharing these containers, how do you do it? You have a few options!

Share the image

If you want absolute reproducibility, meaning that the container that you built is set in stone, never to be changed, and you want to hand it to someone, have them install Singularity and send them your container. This means that you just build the container and give it to them. It might look something like this:

sudo singularity create theultimate.img sudo singularity bootstrap theultimate.img SingularityIn the example above I am creating an image called

theultimate.imgand then building it from a specification file,Singularity. I would then give someone the image itself, and they would run it like an executable, which you can do in many ways:singularity run theultimate.img ./theultimate.imgThey could also shell into it to look around, with or without sudo to make changes (breaks reproducibility, your call, bro).

singularity shell theultimate.img sudo singularity shell --writable theultimate.imgShare the build file Singularity

In the case that the image is too big to attach to an email, you can send the user the build file

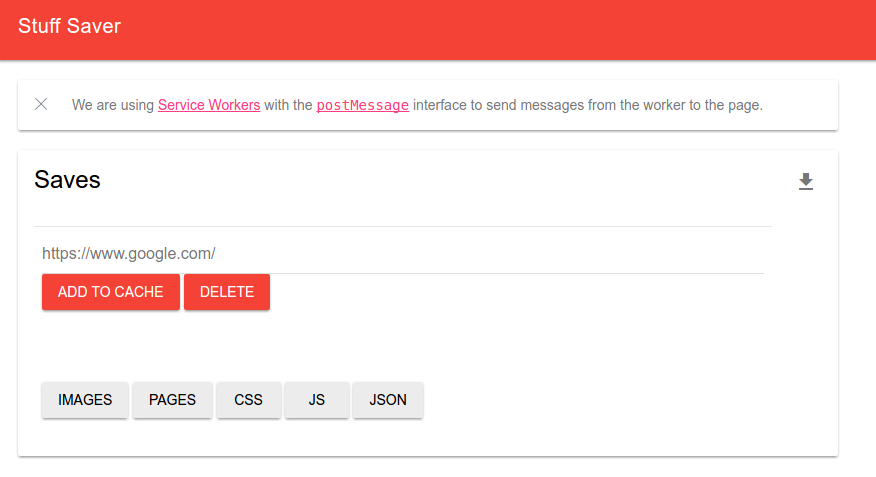

Singularityand he/she can run the same steps to build and run the image. Yeah, it’s not the exact same thing, but it’s captured most dependencies, and granted that you are using versioned packages and inputs, you should be pretty ok.Singularity Hub

Also under development is a Singularity Hub that will automatically build images from the

Singularityfiles upon pushes to connected Github repos. This will hopefully be offered to the larger community in the coming year, 2017.Why aren’t we achieving this?

I’ll close with a few thoughts on our current situation. A lot of harshness has come down in the past few years on the scientific community, especially Psychology, for not practicing reproducible science. Having been a technical person and a researcher, my opinion is that it’s asking too much. I’m not saying that scientists should not be accountable for good practices. I’m saying that without good tools and software, doing these practices isn’t just hard, it’s really hard. Imagine if a doctor wasn’t just required to understand his specialty, but had to show up to the clinic and build his tools and exam room. Imagine if he also had to cook his lunch for the day. It’s funny to think about this, but this is sort of what we are asking of modern day scientists. They must not only be domain experts, manage labs and people, write papers, plead for money, but they also must learn how to code, make websites and interactive things, and be linux experts to run their analyses. And if they don’t? They probably won’t have a successful career. If they do? They probably still will have a hard time finding a job. So if you see a researcher or scientist this holiday season? Give him or her a hug. He or she has a challenging job, and is probably making a lot of sacrifices for the pursuit of knowledge and discovery.

I had a major epiphany during the final years of my graduate school that the domain of my research wasn’t terribly interesting, but rather, the problems wrapped around doing it were. This exact problem that I’ve articulated above - the fact that researchers are spread thin and not able to maximally focus on the problem at hand, is a problem that I find interesting, and I’ve decided to work on. The larger problem, that tools for researchers, because it’s not a domain that makes money, or that there is an entire layer of research software engineers missing from campuses across the globe, is also something that I care a lot about. Scientists would have a much easier time giving us data pipes if they were provided with infrastructure to generate them.

How to help?

If you use Singularity in your work, please comment here, contact me directly, or to researchapps@googlegroups.com so I can showcase your work! Please follow along with the open source community to develop Singularity, and if you are a scientist, please reach out to me if you need applications support.

· -

Tweezers

It was around my 13th birthday when my mom gave me the gift of self-scrutiny. It was an electronic makeup mirror, a clone of her own, that lit up and magnified my face from both sides with an array of daylight themes. Did I want an evening tone? There was soft blue for that. Morning? A yellow, fresh hue. With this mirror came my very own pair of tweezers - the most important tool to commence the morning ritual of plucking away hairs and insecurities. I had watched her do it for my entire childhood, and it was calming in a way. She would be fresh from bath or shower, sometimes in a kerchief, sometimes with hair tied back, and sit down in a tiny chair in front of a mirror parked front and center at a little table with an opening for her legs and a three paneled mirror behind that. If it were a painting, it could be called a triptych. She would first embark on the arduous task of removing any deviant hairs. I remember my hopes for an equivalently flawless and hairfree nose line were shattered when she told me that if I plucked for 20 or more years, they just wouldn’t grow back. That’s a long time when you are 13. At some point she would apply a palm’s dabble of an Estee Lauder white cream - a smooth, sweet smelling cream that I watched in my lifetime go from $40 to $44 a bottle, and likely is now higher. It was, she told me, the secret to her flawless skin. I’m now more convinced it’s some Puerto Rican gene, but it’s a moot point. This cream had a shiny, rounded gold screw top that accented the rectangular, glassy white bottle. The version made years ago had a subtle cream tint to it, and the more modern one is a silkier white. I stopped using it in favor of a non-scented $3.99 bottle of face cream from Trader Joe’s at the onset of graduate school when my allergic-ness kicked in, and the fancy stuff started to give me rashes.

But back to that memory. The smell of the cream and removal of hairs from nose, brow, and (later in her life, chin) was followed by the opening of the makeup drawer. What treasures lay inside! She had brow pencils, an entire section of lipsticks, terrifying black wands that I learned to be mascara, and lip liners. She would first apply a fine line of some kind of “under eye” concealer, to hide any appearance of bags from stress or tiredness. My Mom had (and still has) amazing nails. They were long and clean, and she could jab a nail into the creamy beige stick, and then swipe it under each eye to get a perfect line. A quick rub then would dissolve the line into her skin. The eyes were then complete with a swipe of a dark liner and shadow. The process of applying lipstick was my favorite to watch, because it was like a coloring book on your face, and I could always smell the weird scent of the stuff. She would draw a line cleanly around the lip, sometimes too far over the top, and then color it in like a master painter. It was a brave commitment, because it meant that the lipstick would likely wear off toward the end of the day, leaving only the liner. Never to fear! This is why a lipstick was never far away in a purse, and a husband or child close by to ask about the appearance of the liner. She told me many times growing up that her mother never wore lipstick, and told her it (in more proper words) made her too becoming to men. When I refused to touch it, she told me that it was one of those things that skips generations. I’ll never have a daughter so I don’t have to find out, but I can imagine there is a strong propensity to not do some of the things that you observe your Mom to do. When the face and makeup were complete, the hair would fall out of the kerchief, and my Mom was flawless. Me on the other hand, well here I was in all my unibrow glory:

And there I was. I put my little setup on the floor of my childhood room, and plugged the mirror into the wall and directly under my bulletin board scattered with childhood photos and Hello Kitty lights. I would finish my two Eggo waffles, cut into perfect squares with just the right amount of syrup in the crevices, and go straight up the stairs to start my own ritual. Coming armed with the contribution of my Dad’s gene pool, I had much thicker and darker lashes and eyebrows, and so my uni-brow was extra Frida like. It’s funny how before adolescence, I had never really noticed it, and then it was immediately a marker of some kind of gross, ethnic hair. I painstakingly removed it, many times plucking out too many hairs. The damn caterpillar always grew back in full force, and the voice of my mom… “20 years…” repeated in my head. As for makeup, it never clicked with me. I went through an eye shadow phase in 8th grade, and went nuts with colors, dusts, and creams, and this was also when eye glitter from the store “The Icing” was the rage. The silly little screw-top bottles had this strange goo inside that would completely dry up when left even the slightest bit open. The nicer ones I had were “roll on” style, usually the “Smackers” brand. I still have some of those chapsticks and various teenager “makeup” - they are sitting in my apartment organized in a plastic bin from college one room over. It’s really the texture and scent that I kept them for - it’s an immediate warp back into the past. As for eye mascara, my first (and last) experience was with some sort of waterproof version, which I tried from my friend Kara at a sports club called Hampshire Hills in middle school. I didn’t know about makeup remover, and in sheer horror when I couldn’t get it off and resorted to using fingers to pull out my eyelashes, I never touched it again. I still won’t.

When I entered college, I didn’t bring that mirror, and was long over any kind of makeup beyond chapstick and tinted pimple cream. “Pimples” I’ve come to learn are actually pretty unlikely for many, but brought on by anxiety and some maladaptive desire to pick at imperfections. It’s a constant struggle, but I’m getting better! I brought those tweezers along, and every few years my Mom would buy me a new pair - always a really nice pair - because the ones in the drug store weren’t suitable for the task. Did I stick to my duty? Sort of, kind of. A combination of laziness and lacking setup for my ritual led to the abolishment of the daily plucking in exchange for a weekly or bi-weekly grooming. I went through phases of forgetting, and finally, not really caring, and only doing some touch up if there was a formal event like a graduation or wedding.

I just turned 30. It’s now been (almost) those 20 years (and well, I haven’t been true to my duty), but the hairs always came back. At some point in graduate school, I just stopped caring. The higher level awareness of this entire experience is the realization that this focus, this kind of ritual that derives self-worth from obtaining a particular appearance and going to extremes to achieve it, is just ridiculous. Nobody has to do this crap. It took me years to stumble on this, matter of fact I only derived this insight when I found my groove, and I found my meaning, both personally and in the things I am passionate about doing. Maybe it is some kind of “growing up” milestone, but if we had to put eggs in baskets to correspond with how we evaluate ourselves, I just don’t care to put any eggs in the “how do my eyebrows look right now?” and “should I gussy up a bit?” baskets. It’s much more fun to be silly, express your ideas and personality with intensity, and it doesn’t matter if the hairs on your face are groomed are not. When I’m tired because I’ve worked hard in a day, you are going to see that, and it’s just going to be that. As I age, you are going to know and see me, and not some mask that I hide over me to meet some idealistic plastic version of myself. Everyone else is too busy with their own insecurity and heavy self-awareness to care. This self-realization that sort of happened, but came to awareness after the fact, has been empowering on many levels. I can look at the tweezer in my bathroom, have awareness of a fast fading ritualistic memory, and then just walk away. And now I want the opposite - I am rooting for every hair on my face to grow back in all its little glory!

This post is dedicated to my Mom. As a grown up now myself, I wish, in retrospect, that she would have seen and appreciated her beauty without the makeup on - because it seems too easy to get used to a red lip, or a shadowed eye, and feel lacking without them. Mom - the times when you didn’t want to be seen outside with your Pajamas on, when you checked your lipstick in the car mirror just to be safe, or when you put on makeup in the middle of the night because you felt vulnerable, you didn’t need to do that. Radiating your inner energy and joy would have been the light that blinded people from any imperfection in your skin that was possible to see. I know that I will be loved, and I will be the same, irrespective of these things. It is not the look of a face that gives inner beauty, and so to close this post, I do not wish to validate the societal standard of evaluating women based on looks by saying something about looks. Mom - with and without your makeup, with and without your insecurities, you are beautiful. As your hair gets white, and your face more aged, I hope you can really feel that. I know that I do.

· -

Python Environments, A User Guide

Do you want to run Python? I can help you out! This documentation is specific to the

farmshare2cluster at Stanford, on which there are several versions on python available. The python convention is that python v2 is called ‘python’, and python v3 is called ‘python3’. They are not directly compatible, and in fact can be thought of as entirely different software.How do I know which python I’m calling?

Like most Linux software, when you issue a command to execute some software, you have a variable called

$PATHthat loads the first executable it finds with that name. The same is true forpythonandpython3. Let’s take a look at some of the defaults:# What python executable is found first? rice05:~> which python /usr/bin/python # What version of python is this? rice05:~> python --version Python 2.7.12 # And what about python3? rice05:~> which python3 /usr/bin/python3 # And python3 version rice05:~> python3 --version Python 3.5.2This is great, but what if you want to use a different version? As a reminder, most clusters like Farmshare2 come with packages, modules, and can also be installed with your custom software (here’s a refresher if you need it). Let’s talk about the different options for extending the provided environments, or creating your own environment. First, remember that for all of your scripts, the first line instructs what executable to use. So make sure to have this at the top of your script:

#!/usr/bin/env pythonNow, what to do when the default python doesn’t fit your needs? You have many choices:

- Install to a User Library if you want to continue using a provided python, but add a module of your choice to a personal library

- Install a conda environment if you need standard scientific software modules, and don’t want the hassle of compiling and installing them.

- Create a virtual environment if you want more control over the version and modules

1. Install to a User Library

The reason that you can’t install to the shared

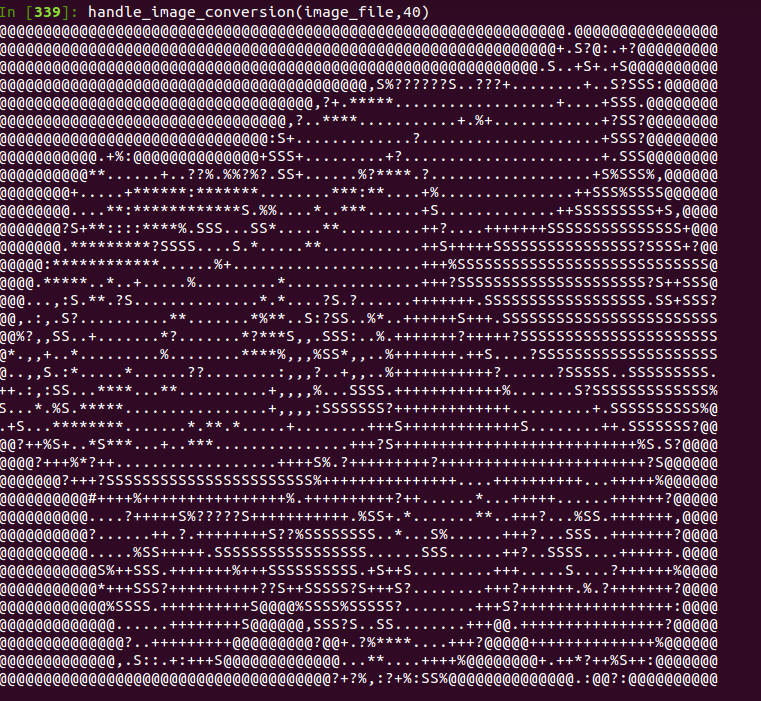

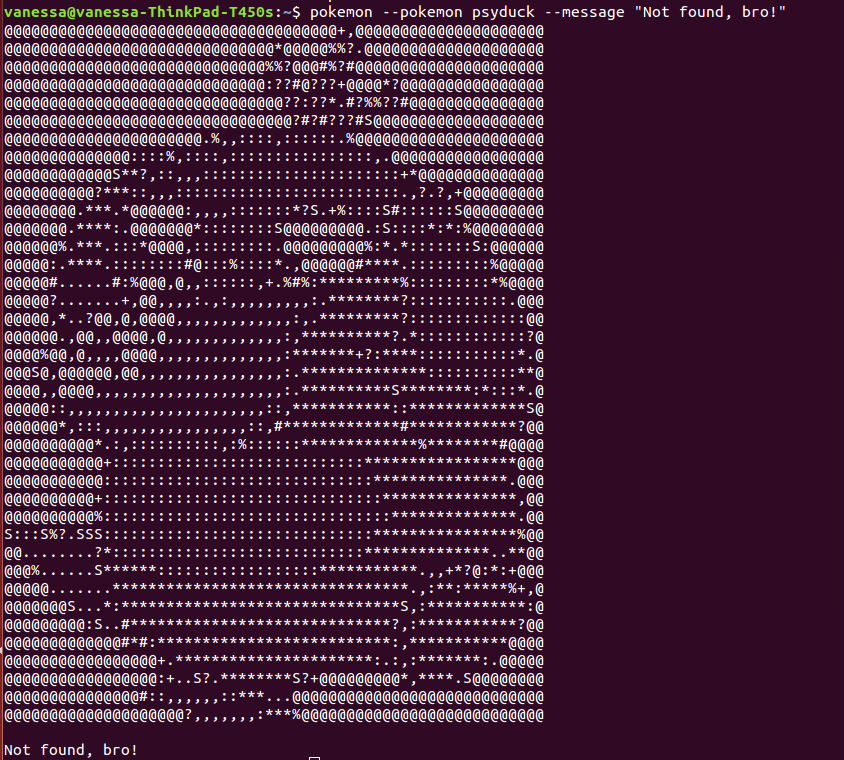

pythonorpython3is because you don’t have access to thesite-packagesfolder, which is where the modules are looked for automatically by python. But don’t despair! You can install to your (very own)site-packagesby simply appending the--userargument to the install command. For example:# Install the pokemon-ascii package pip install pokemon --user # Where did it install to? rice05:~> python Python 2.7.12 (default, Jul 1 2016, 15:12:24) [GCC 5.4.0 20160609] on linux2 Type "help", "copyright", "credits" or "license" for more information. >>> import pokemon >>> pokemon.__file__ '/home/vsochat/.local/lib/python2.7/site-packages/pokemon/__init__.pyc'As you can see above, your

--userpackages install to a site packages folder for the python version under.local/lib. You can always peek into this folder to see what you have installed.rice05:~> ls $HOME/.local/lib/python2.7/site-packages/ nibabel pokemon virtualenv.py nibabel-2.1.0.dist-info pokemon-0.32.dist-info virtualenv.pyc nisext virtualenv-15.0.3.dist-info virtualenv_supportYou probably now have two questions.

- How does python know to look here, and

- How do I check what other folders are being checked?

How does Python find modules?

You can look at the

sys.pathvariable, a list of paths on your machine, to see where Python is going to look for modules:rice05:~> python Python 2.7.12 (default, Jul 1 2016, 15:12:24) [GCC 5.4.0 20160609] on linux2 Type "help", "copyright", "credits" or "license" for more information. >>> import sys >>> sys.path ['', '/usr/lib/python2.7', '/usr/lib/python2.7/plat-x86_64-linux-gnu', '/usr/lib/python2.7/lib-tk', '/usr/lib/python2.7/lib-old', '/usr/lib/python2.7/lib-dynload', '/home/vsochat/.local/lib/python2.7/site-packages', '/usr/local/lib/python2.7/dist-packages', '/usr/lib/python2.7/dist-packages']Above we can see that the system libraries are loaded before local, so if you install a module to your user folder, it’s going to be loaded after. Did you notice that the first entry is an empty string? This means that your present working directory will be searched first. If you have a file called

pokemon.pyin this directory and then you doimport pokemon, it’s going to use the file in the present working directory.How can I dynamically change the paths?

The fact that these paths are stored in a variable means that you can dynamically add / tweak paths in your scripts. For example, when I fire up

python3and load numpy, it uses the first path found insys.path:>>> import numpy >>> numpy.__path__ ['/usr/lib/python3/dist-packages/numpy']And I can change this behavior by removing or appending paths to this list before importing. Additionally, you can add paths to the environmental variable

$PYTHONPATHto add folders with modules (read about PYTHONPATH here). First you add the variable to the path:# Here is setting an environment variable with csh rice05:~> setenv PYTHONPATH /home/vsochat:$PYTHONPATH # And here with bash rice05:~> export PYTHONPATH=/home/vsochat:$PYTHONPATH # Did it work? rice05:~> echo $PYTHONPATH /home/vsochatNow when we run python, we see the path has been appended to the beginning of

sys.path:rice05:~> python Python 2.7.12 (default, Jul 1 2016, 15:12:24) [GCC 5.4.0 20160609] on linux2 Type "help", "copyright", "credits" or "license" for more information. >>> import sys >>> sys.path ['', '/home/vsochat', '/usr/lib/python2.7', '/usr/lib/python2.7/plat-x86_64-linux-gnu', '/usr/lib/python2.7/lib-tk', '/usr/lib/python2.7/lib-old', '/usr/lib/python2.7/lib-dynload', '/home/vsochat/.local/lib/python2.7/site-packages', '/usr/local/lib/python2.7/dist-packages', '/usr/lib/python2.7/dist-packages']Awesome!

How do I see more information about my modules?

You can look to see if a module has a

__version__, a__path__, or a__file__, each of which will tell you details that you might need for debugging. Keep in mind that not every module has a version defined.rice05:~> python Python 2.7.12 (default, Jul 1 2016, 15:12:24) [GCC 5.4.0 20160609] on linux2 Type "help", "copyright", "credits" or "license" for more information. >>> import numpy >>> numpy.__version__ '1.11.0' >>> numpy.__file__ '/usr/lib/python2.7/dist-packages/numpy/__init__.pyc' >>> numpy.__path__ ['/usr/lib/python2.7/dist-packages/numpy'] >>> numpy.__dict__If you are really desperate for seeing what functions the module has available, take a look at (for example, for numpy)

numpy.__dict__.keys(). While this doesn’t work on the cluster, if you load a module in iPython you can press TAB to autocomplete for available options, and add a single or double_to see the hidden ones like__path__.How do I ensure that my package manager is up to date?

We’ve hit a conundrum! How does one “pip install pip”? And further, how do we ensure we are using the pip version associated with the currently active python? The same way that you would upgrade any other module, using the

--upgradeflag:rice05:~> python -m pip install --user --upgrade pip rice05:~> python -m pip install --user --upgrade virtualenvAnd note that you can do this for virtual environments (

virtualenv) as well.2. Install a conda environment

There are a core set of scientific software modules that are quite annoying to install, and this is where anaconda and miniconda come in. These are packaged virtual environments that you can easily install with pre-compiled versions of all your favorite modules (numpy, scikit-learn, pandas, matplotlib, etc.). We are going to be following instructions from the miniconda installation documentation. Generally we are going to do the following:

- Download the installer

- Run it to install, and install to our home folder

- (optional) add it to our path

- Install additional modules with conda

First get the installer from here, and you can use

wgetto download the file to your home folder:rice05:~> cd $HOME rice05:~> wget https://repo.continuum.io/miniconda/Miniconda3-latest-Linux-x86_64.sh # Make it executable rice05:~> chmod u+x Miniconda3-latest-Linux-x86_64.shThen run it! If you do it without any command line arguments, it’s going to ask you to agree to the license, and then interactively specify installation parameters. The easiest thing to do is skip this, using the

-bparameter will automatically agree and install tominiconda3in your home directory:rice05:~> ./Miniconda3-latest-Linux-x86_64.sh -b PREFIX=/home/vsochat/miniconda3 ... (installation continues here)If you want to add the miniconda to your path, meaning that it will be loaded in preference to all other pythons, then you can add it to your .profile:

echo "export PATH=$HOME/miniconda3/bin:$PATH >> $HOME/.profile"Then source your profile to make the python path active, or log in and out of the terminal to do the same:

source /home/vsochat/.profileFinally, to install additional modules to your miniconda environment, you can use either conda (for pre-compiled binaries) or the pip that comes installed with the miniconda environment (in the case that the conda package managed doesn’t include it).

# Scikit learn is included in the conda package manager /home/vsochat/miniconda3/bin/conda install -y scikit-learn # Pokemon ascii is not /home/vsochat/miniconda3/bin/pip install pokemon3. Install a virtual environment

If you don’t want the bells and whistles that come with anaconda or miniconda, then you probably should go for a virtual environment. The Hitchhiker’s Guide to Python has a great introduction, and we will go through the steps here as well. First, let’s make sure we have the most up to date version for our current python:

rice05:~> python -m pip install --user --upgrade virtualenvSince we are installing this to our user (

.local) folder, we need to make sure the bin (with executables for the install) is on our path, because it usually won’t be:# Ruhroh! rice05:~/myproject> which virtualenv virtualenv: Command not found. # That's ok, we know where it is! rice05:~/myproject> export PATH=/home/vsochat/.local/bin:$PATH # (and for csh) rice05:~/myproject> setenv PATH /home/vsochat/.local/bin:$PATH # Did we add it? rice05:~/myproject> which virtualenv /home/vsochat/.local/bin/virtualenvYou can also add this to your

$HOME/.profileif you want it sourced each time.Now we can make and use virtual environments! It is as simple as creating it, and activating it:

rice05:~>mkdir myproject rice05:~>cd myproject rice05:~/myproject> virtualenv venv New python executable in /home/vsochat/myproject/venv/bin/python Installing setuptools, pip, wheel...done. rice05:~/myproject> ls venvTo activate our environment, we use the executable

activatein the bin provided. If you take a look at the files inbin, there is an activate file for each kind of shell, and there is also the executables forpythonand the package managerpip:rice05:~/myproject> ls venv/bin/ activate activate_this.py pip python python-config activate.csh easy_install pip2 python2 wheel activate.fish easy_install-2.7 pip2.7 python2.7Here is how we would active for csh:

rice05:~/myproject> source venv/bin/activate.csh [venv] rice05:~/myproject>Notice any changes? The name of the active virutal environment is added to the terminal prompt! Now if we look at the python and pip versions running, we see we are in our virtual environment:

[venv] rice05:~/myproject> which python /home/vsochat/myproject/venv/bin/python [venv] rice05:~/myproject> which pip /home/vsochat/myproject/venv/bin/pipAgain, you can add the source command to your

$HOME/.profileif you want it to be loaded automatically on login. From here you can move forward with usingpython setup.py install(for local module files) andpip install MODULEto install software to your virtual environment.To exit from your environment, just type

deactivate:[venv] rice05:~/myproject> deactivate rice05:~/myproject>PROTIP You can specify commands to your virtualenv creation to include the system site packages in your environment. This is useful for modules like numpy that require compilation (lib/blas, anyone?) that you don’t want to deal with:

rice05:~/myproject> virtualenv venv --system-site-packagesReproducible Practices

Whether you are a researcher or a software engineer, you are going to run into the issue of wanting to share your code, and someone on a different cluster running it. The best solution is to container-ize everything, and for this we recommend using Singularity. However, let’s say that you’ve been a bit disorganized, and you want to quickly capture your current python environment either for a requirements.txt file, or for a container configuration? If you just want to glance and get a “human readable” version, then you can do:

rice05:~> pip list biopython (1.66) decorator (4.0.6) gbp (0.7.2) nibabel (2.1.0) numpy (1.11.0) pip (8.1.2) pokemon (0.32) Pyste (0.9.10) python-dateutil (2.4.2) reportlab (3.3.0) scipy (0.18.1) setuptools (28.0.0) six (1.10.0) virtualenv (15.0.1) wheel (0.29.0)If you want your software printed in the format that will populate the

requirement.txtfile, then you want:rice05:~> pip freeze biopython==1.66 decorator==4.0.6 gbp==0.7.2 nibabel==2.1.0 numpy==1.11.0 pokemon==0.32 Pyste==0.9.10 python-dateutil==2.4.2 reportlab==3.3.0 scipy==0.18.1 six==1.10.0 virtualenv==15.0.1And you can print this right to file:

·# Write to new file rice05:~> pip freeze > requirements.txt # Append to file rice05:~> pip freeze >> requirements.txt -

The Constant Struggle

· -

Contained Environments for Software for HPC

I was recently interested in doing what most research groups do, setting up a computational environment that would contain version controlled software, and easy ways for users in a group to load it. There are several strategies you can take. Let’s first talk about those.

Strategies for running software on HCP

Use the system default

Yes, your home folder is located on some kind of server with an OS, and whether RHEL, CentOS, Ubuntu, or something else, it likely comes with, for example, standard python. However, you probably don’t have any kind of root access, so a standard install (let’s say we are installing the module

pokemon) like any of the following won’t work:# If you have the module source code, with a setup.py python setup.py install # Install from package manager, pip pip install pokemon # use easy_install easy_install pokemonEach of the commands above would attempt to install to the system python (something like

/usr/local/lib/pythonX.X/site-packages/) and then you would get a permission denied error.OSError: [Errno 13] Permission denied: '/usr/local/lib/python2.7/dist-packages/pokemon-0.1-py2.7.egg/EGG-INFO/entry_points.txt'Yes, each of the commands above needs a sudo, and you aren’t sudo, so you can go home and cry about it. Or you can install to a local library with something like this:

# Install from package manager, pip, but specify as user pip install pokemon --userI won’t go into details, but you could also specify a –prefix to be some folder you can write to, and then add that folder to your