I’ve been putting off for some time working on an integration that will combine Singularity with vulnerability scanning. It was specifically discussed for Singularity Registry Server, where one of my colleagues that I’ve enjoyed working with immensely in the past (and have a lot of respect for!) got us started, and the issue got side-tracked with adding a general plugin framework to the registry. This particular Clair integration for the registry isn’t ready yet (the Globus integration Pull Request is in the queue first, needing review!) but in the meantime I created a small docker-compose application, stools or “Singularity Tools,” and an example usage with Travis CI to bring true continuous vulnerability scanning to a container recipe on GitHub or a container served by Docker Hub or Singularity Hub! In this post I’ll walk through how to set this up.

Too long didn’t read

- stools builds a Docker container that can run Clair checks for a Singularity container

- With Travis you can perform vulnerability scanning on every push, or at a scheduled interval

- The checks can be for one or more recipes, or containers already existing on Docker or Singularity Hub

Awesome! Here it is in action. I recorded this after not sleeping an entire night, so apologies for my sleepy dinosaur typing:

If you want to skip the prose,

- go straight to the example Travis code

- contribute to the development of stools at singularityhub/stools

- or see the example testing on Travis

More information is provided below.

Singularity Tools for Continuous Integration

“stools” means “Singularity Tools for Continuous Integration.” It’s a tongue-in-cheek name, because I’m well aware of what it sounds like, and in fact we would hope that tools like this can help you to clear that stuff out from your containers :).

How does it work?

Singularity doesn’t do much here. We simply export a container to a .tar.gz and then present it as a layer to be analyzed by Clair.

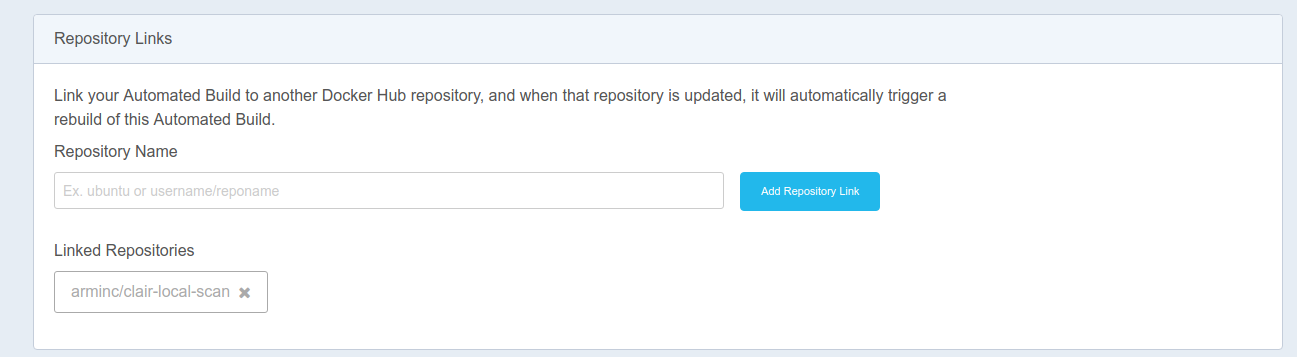

We use the arminc/clair-local-scan base, which grabs a daily update of security vulnerabilities. The container used in the .travis.yml should actually be updated with the most recent vulnerabilities. How? @arminc has set up his continuous integration to be triggered by a cron job, so that it happens on a regular basis. This means that his CI setup triggers a build on Docker Hub, and my Docker Hub repository is also configured to trigger at this same time!

Specifically, my repository builds a container that uses the clair-local-scan as a base, installs production Singularity, and then installs the package served in the repo, called stools. How does the updating work without me needing to do much? I found a section in settings called “Build Triggers” and was able to add the arminc/clair-local-scan Docker Hub repository.

This means his hard work to rebuild the clair-local-scan with updated vulnerabilities will also build an updated vanessa/stools-clair, which is then pulled via docker-compose when your continuous integration runs. Again, very cool! This is like, Matrix level continuous integration!

Instructions

- Add the .travis.yml file to your Github repository with one or more Singularity recipes

- Ensure your recipe location(s) are specified correctly (see below), depending on whether you want to build or pull.

- Connect your repository to Travis (instructions), and optionally set up a cron job for continuous vulnerability testing.

The .travis.yml file is going to retrieve the docker-compose.yml (a configuration to run Docker containers) from the stools repo, start the needed containers clair-db and clair-scanner, and then issue commands to clair-scanner to build or pull, and then run the scan with the installed utility sclair. I like this name too, it almost sounds like some derivation of “eclair” nomnomnom. The entire file looks like this:

    sudo: required
    language: ruby
    services:
      - docker

    before_install:
      - wget https://raw.githubusercontent.com/singularityhub/stools/master/docker-compose.yml

    script:
      # Bring up necessary containers defined in docker-compose retrieved above
      - docker-compose up -d clair-db
      - docker-compose up -d clair-scanner
      - sleep 3

      # Perform the build from your Singularity file, we are at the base of the repo
      - docker exec -it clair-scanner singularity build container.simg Singularity

      # Run sclair in the container to perform the scan
      - docker exec -it clair-scanner sclair container.simg

There are many ways you can customize this simple file for your continuous vulnerability scanning! Let’s discuss them next.

Set up cron jobs

Cron is a Linux-based scheduler that lets you tell your computer “perform this task at this particular interval.” Travis added a cron scheduler some time ago that I was excited to use, but I didn’t have a strong use case at the time. What it means is that we can have our continuous integration (the instructions defined in the .travis.yml) run at some frequency. How to do that?

- Navigate to the project settings page, usually at **https://travis-ci.org/[organization]/[repo]/settings**

- Under "Cron Jobs" select the branch and interval that you want the checks to run, and then click "Add"

And again, that’s it!
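Scheduled runs and push-triggered runs use the same .travis.yml. If you want them to behave differently, Travis sets the environment variable `TRAVIS_EVENT_TYPE` to `cron` for scheduled builds. The following is only a sketch of the `script` section under that assumption: on a push it builds from the recipe, and on a cron run it re-scans an already published image instead. The `docker://ubuntu:16.04` address is a placeholder for whatever image you actually publish, and the rest of the file is assumed to be unchanged.

```yaml
script:
  - docker-compose up -d clair-db
  - docker-compose up -d clair-scanner
  - sleep 3

  # Travis sets TRAVIS_EVENT_TYPE to "cron" for scheduled builds.
  # The docker:// address below is a placeholder, not part of stools.
  - |
    if [ "$TRAVIS_EVENT_TYPE" = "cron" ]; then
      docker exec -it clair-scanner singularity pull --name container.simg docker://ubuntu:16.04
    else
      docker exec -it clair-scanner singularity build container.simg Singularity
    fi

  # Either way, the image to scan ends up with the same name
  - docker exec -it clair-scanner sclair container.simg
```

Each branch of the `if` runs a single command, so a failed build or pull still fails the Travis step.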

Change the recipe file

If you have a Singularity recipe in the base of your repository, you should not need to change the .travis.yml example file. If you want to change it to include one or more recipes in different locations, then you will want to change this line:

    # Perform the build from your Singularity file, we are at the base of the repo
    - docker exec -it clair-scanner singularity build container.simg Singularity

Where it says Singularity you might change it to path/in/repo/Singularity.
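As a sketch, the changed `script` section might look like the following. The path `path/in/repo/Singularity` is the same illustrative placeholder used above, so substitute the real location of your recipe relative to the base of the repository.

```yaml
script:
  - docker-compose up -d clair-db
  - docker-compose up -d clair-scanner
  - sleep 3

  # Build from a recipe that is not at the base of the repo
  # (path/in/repo/Singularity is a placeholder path)
  - docker exec -it clair-scanner singularity build container.simg path/in/repo/Singularity

  # The scan step does not change, since the output image name is the same
  - docker exec -it clair-scanner sclair container.simg
```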

Add another recipe file

You aren’t limited in the number of containers you can build and test! You can build more than one, and test the resulting containers, like this:

    - docker exec -it clair-scanner singularity build container1.simg Singularity
    - docker exec -it clair-scanner singularity build container2.simg Singularity.two
    - docker exec -it clair-scanner sclair container1.simg container2.simg

In the example above, we are building two containers, each from a different Singularity file in the repository. Singularity is installed in the clair-scanner Docker image, so we are free to use it.

Pull a container

If you don’t want to build here, you can use Continuous Integration just for vulnerability scanning of containers built elsewhere. Let’s say we have a repository with just a Travis file. We can actually use it to test all of our Docker images (converted to Singularity) or containers hosted on Singularity Hub. That might look like this:

    - docker exec -it clair-scanner singularity pull --name vsoch-hello-world.simg shub://vsoch/hello-world
    - docker exec -it clair-scanner singularity pull --name ubuntu.simg docker://ubuntu:16.04
    - docker exec -it clair-scanner sclair vsoch-hello-world.simg ubuntu.simg

In the example above, we are pulling the first container from Singularity Hub and the second from Docker Hub, and testing them both for vulnerabilities, again with the executable sclair in the container clair-scanner (it is named in the docker-compose.yml file).
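If you would rather have one Travis job (and one report) per container, a build matrix is one option. This is a sketch, not something stools provides: each entry under `env` becomes its own job, and `CONTAINER_URI` is a hypothetical variable name chosen for this example.

```yaml
env:
  # Each entry here becomes a separate Travis job.
  # CONTAINER_URI is a hypothetical variable name, not something stools defines.
  - CONTAINER_URI=shub://vsoch/hello-world
  - CONTAINER_URI=docker://ubuntu:16.04

script:
  - docker-compose up -d clair-db
  - docker-compose up -d clair-scanner
  - sleep 3

  # Pull whichever container this job was assigned, then scan it
  - docker exec -it clair-scanner singularity pull --name container.simg "$CONTAINER_URI"
  - docker exec -it clair-scanner sclair container.simg
```

The tradeoff is that each job starts its own clair-db and clair-scanner, so this costs more build time than scanning everything in a single job.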

Rename the Output

You might also change the name of the output image (container.simg). Why? Imagine that you are using Travis to build, test, and then upon success, to upload to some container storage. Check out different ways you can deploy from Travis, for example.
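For example, you might want a different image name for each build so that uploads don’t overwrite each other. The sketch below assumes you name the image after `TRAVIS_BUILD_NUMBER`, which Travis sets for every build. `IMAGE_NAME` is a hypothetical variable introduced only for this example.

```yaml
script:
  - docker-compose up -d clair-db
  - docker-compose up -d clair-scanner
  - sleep 3

  # IMAGE_NAME is a hypothetical variable; TRAVIS_BUILD_NUMBER is set by Travis
  - export IMAGE_NAME="container-${TRAVIS_BUILD_NUMBER}.simg"
  - docker exec -it clair-scanner singularity build "$IMAGE_NAME" Singularity
  - docker exec -it clair-scanner sclair "$IMAGE_NAME"
```

Note that the image is written inside the clair-scanner container, so a deploy step would first need to copy it out (for example with `docker cp`). The source path for that copy depends on the container’s working directory, which is not shown here.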

Feedback Wanted!

Or do something else entirely different! I’ve provided a very slim usage example that just spits out a report to the console during testing. Let’s talk now about what we might do next. I won’t proactively do these things until you poke me, so please do that! Here are some questions to get you started:

- Under what conditions might we want a build to fail testing?

- Would you like a CircleCI example? Artifacts?

- Is it worth having some kind of message sent back to Github (would require additional permissions)?

- Circle has support for artifacts. How might you want results presented?

Notably, the application produces reports in JSON that are printed as text on the screen. The JSON means that we can easily plug them into a nice rendered web interface, and given artifacts on CircleCI, we could get a nice web report for each run. Would you like to see this? What kind of reports would be meaningful to you? How do you intend or want to respond to the reports?

Please let me know your feedback!

Suggested Citation:

Sochat, Vanessa. "Continuous Vulnerability Testing." @vsoch (blog), 27 Apr 2018, https://vsoch.github.io/2018/stools-clair/ (accessed 01 Jul 25).